No, Apple Didn’t Block ‘Fortnite’ From the E.U. App Store

Epic Games on X early on Friday morning:

Apple has blocked our Fortnite submission so we cannot release to the US App Store or to the Epic Games Store for iOS in the European Union. Now, sadly, Fortnite on iOS will be offline worldwide until Apple unblocks it.

People have asked me why, in my update earlier this month, I called Epic Games a dishonest company. Here’s a great example: Apple never blocked Epic’s “Fortnite” submission on iOS, either in the United States or the European Union, but Epic has reported it as blocked nearly everywhere, including to those moronic content farm “news” accounts all over social media. This is a downright lie from Epic. Here’s the relevant snippet from a letter Apple sent to Epic that Epic itself made public:

As you are well aware, Apple has previously denied requests to reinstate the Epic Games developer account, and we have informed you that Apple will not revisit that decision until after the U.S. litigation between the parties concludes. In our view, the same reasoning extends to returning Fortnite to the U.S. storefront of the App Store regardless of which Epic-related entity submits the app. If Epic believes that there is some factual or legal development that warrants further consideration of this position, please let us know in writing. In the meantime, Apple has determined not to take action on the Fortnite app submission until after the Ninth Circuit rules on our pending request for a partial stay of the new injunction.

Apple did not approve or reject Epic’s app update, which Epic submitted to both the E.U. and U.S. App Stores last week, so the update is held up indefinitely in App Review. When Epic says it cannot “release… to the Epic Games Store for iOS in the European Union,” it specifically means this latest release, which was also sent to the United States. “Fortnite” is still available on iOS in the European Union; it’s just that the latest patch hasn’t been reviewed. But any unsuspecting “Fortnite” player who spent time on social media in the last day wouldn’t know that — Apple is portrayed as an evil antagonist playing games again. For once, that’s incorrect.

Epic then wrote back to the judge in the Epic Games v. Apple lawsuit, Judge Yvonne Gonzalez Rogers, asking for yet another injunction because it thinks this is somehow a violation of the first admonishment from late April. From Epic’s petition to the court:

Apple’s refusal to consider Epic’s Fortnite submission is Apple’s latest attempt to circumvent this Court’s Injunction and this Court’s authority. Epic therefore seeks an order enforcing the Injunction, finding Apple in civil contempt yet again, and requiring Apple to promptly accept any compliant Epic app, including Fortnite, for distribution on the U.S. storefront of the App Store.

As I wrote in my update earlier this month calling Epic a company of liars, Judge Gonzalez Rogers’ injunction was scathing toward Apple, but it stopped short of forcing Apple to allow Epic back onto the App Store. That’s because Epic was found liable for breach of contract and was ordered to pay Apple “30% of the $12,167,719 in revenue Epic Games collected from users in the Fortnite app on iOS through Epic Direct Payment between August and October 2020, plus (ii) 30% of any such revenue Epic Games collected from November 1, 2020 through the date of judgment, and interest according to law.” I pulled that quote directly from the judge’s 2021 decision when she ruled on Apple’s counterclaims. Apple was explicitly not required to reinstate Epic’s developer account, and that remained true even after the April injunction. They’re different parts of the same lawsuit.
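For a sense of scale, the first part of that award is simple arithmetic on the figure quoted above. The second part depends on revenue from November 1, 2020 through the date of judgment, which the quote doesn’t give, so it stays symbolic here as R (my placeholder, not a number from the ruling):

$$
\text{award} = 0.30 \times \$12{,}167{,}719 + 0.30\,R + \text{interest} = \$3{,}650{,}315.70 + 0.30\,R + \text{interest}
$$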

Obviously Epic is trying to sneak this past the court, but Judge Gonzalez Rogers isn’t an idiot. The April injunction ruled on the one count (of 10) that Apple lost in the 2021 decision, but it did not modify the original ruling. Apple was still found not liable on nine of 10 counts brought by Epic, and it won the counterclaim of breach of contract, which pertains to Epic’s developer account. Here’s a quote from the 2021 decision:

Because Apple’s breach of contract claim is also premised on violations of [Developer Program License Agreement] provisions independent of the anti-steering provisions, the Court finds and concludes, in light of plaintiff’s admissions and concessions, that Epic Games has breached these provisions of the DPLA and that Apple is entitled to relief for these violations.

“Apple is entitled to relief for these violations.” Interesting. Notice how the 2021 order rules extensively on this matter, whereas this year’s injunction includes nothing of the sort. That’s because the April ruling only affected the one count where Apple was indeed found liable — violation of the California Unfair Competition Law. The court’s mandated remedy for that count was opening the App Store to third-party payment processors; it says nothing about bringing Epic back to the App Store.

Epic is an attention-seeking video game monopoly, and Tim Sweeney, its chief executive, is a lying narcissist whose publicity stunts are unbearable to watch. I’ll be truly shocked if Judge Gonzalez Rogers goes against her 2021 order and forces Apple to let Epic back on the store in the United States.

There is an argument for Apple acting nice and letting Epic back on, regardless of the judge’s decision, to preserve its brand image. While I agree it should let external payment processors on the store purely out of self-defense, irrespective of how the court rules on appeal, I disagree that it should capitulate to Epic, Spotify, or any of these thug companies. If Epic really wanted to use its own payment processor in “Fortnite” back in 2020, it should’ve just sued Apple without breaking the rules of the App Store. Apple wouldn’t have had any reason to remove it from the App Store, and it would now be able to take advantage of the new App Store rules introduced a few weeks ago. Epic is run by a petulant brat; self-respecting adults don’t break the rules and play the victim when they get caught.

If Apple lets Epic back on the store, it sets a new precedent: that any company can break Apple’s rules, sue it, and run any scam on the App Store. What if some scum developer started misusing people’s credit cards, sued Apple to get its developer account back after it got caught, and banked on public support to get back on the store because Apple cares about its “public image”? Bullying a company for enforcing its usually well-intentioned rules — even if they may be illegal now — is terrible because it negates all of the rules. Epic broke the rules. It cheated. It lied. It’s run by degenerates. Liars should never be allowed on any public marketplace — let alone the most valuable one in the nation.

Google Announces Android Updates Ahead of I/O

Allison Johnson, reporting for The Verge:

Google just announced a bold new look for Android, for real this time. After a false start last week when someone accidentally published a blog post too early (oh, Google!), the company is formally announcing the design language known as Material Three Expressive. It takes the colorful, customizable Material You introduced with Android 12 in an even more youthful direction, full of springy animations, bold fonts, and vibrant color absolutely everywhere. It’ll be available in an update to the Android 16 beta later this month…

But the splashy new design language is the update’s centerpiece. App designers have new icon shapes, type styles, and color palettes at their disposal. Animations are designed to feel more “springy,” with haptics to underline your actions when you swipe a notification out of existence.

The new design, frankly, is gorgeous. Don’t get me wrong: I like minimalist, simple user interfaces, but the beautiful burst of color, large buttons, and rounded shapes throughout the new operating system look distinctive and so uniquely Google. Gone are the days of Google design looking dated and boring — think Google Docs or Gmail, which both look at least six years past their prime — and I’m excited Google has decided to usher in a new, bold, exciting design era for the world’s most-used operating system.

But that’s where the plan begins to fall apart. Most Android apps flat-out refuse to support Google’s new design standards whenever they come out. It’s somewhat the same situation on iOS, where major developers like Uber, Meta, or even Google itself fail to support the native iOS design paradigms, but iOS has a much more vibrant app scene, and opinionated developers try to use the native OS design. Examples include Notion, Craft, Fantastical, and ChatGPT, all of which are styled just like any Apple-made app. When the new Apple OS redesign comes this fall, I expect all of those apps will be updated on Day 1 to support the new look. The same can’t be said for Android apps, which often diverge significantly from the “stock Android” design.

I put “stock Android” in quotes because this really isn’t stock Android. The base open-source version of the operating system is un-styled and isn’t pleasant to use. This is the Google version of Android, but because Google makes Android, people refer to it as the original, “vanilla” Android. Other smartphone manufacturers like Samsung wrap Android in their own software skin, like One UI, which I find unspeakably abhorrent. Everything about One UI disgusts me. It lacks taste and character, just as the “stock Android” of 10 years ago did. When Samsung inevitably updates One UI in a year (or probably longer) to support the new features, it’ll probably ditch half of the new styling and replace it with whatever Samsung thinks looks nice.

This is why Android apps rarely support the Google design ethos — because they must look good on every Android device, whether it’s by Google, Nothing, Samsung, or whoever else. That’s a shame because it defeats the point of a redesign as wonderful as Material 3 Expressive, which in part was created to unify the design throughout the OS. All of Google’s images from the “Android Show” keynote Tuesday morning showed every app carrying the same accent and background colors, button shapes, and other interface elements, but that’s hardly realistic. Thanks to Android hardware makers like Samsung, Android has always felt like a convention of independent software where every booth looks different, rather than a cohesive OS.

Speaking of Samsung, this comment from David Imel, a host of the “Waveform Podcast,” stuck out to me:

You always have to wonder what behind-the-scenes deals had to have happened for Google to use the S24/S25 Ultra as the presentation device in all its keynotes for the last year.

I don’t know if they’re deals so much as Google demonstrating that it welcomes competition. I asked basically the same question, and most of the replies came down to, “The Google Pixel isn’t a popular device and Google wants to showcase other Android phones as a means to embrace the competition.” It really is a shame Google is under so much regulatory scrutiny (of its own doing), though, because the Pixel is the best Android phone in my book, and it ought to be displayed in all of Google’s keynotes. The most direct competitor to the iPhone, I feel, is not any of Samsung’s high-end flagships, but the Google Pixel line, because Pixels bridge hardware and software just like iPhones. Gemini runs best on Google Tensor processors, and the interface isn’t cluttered and messed up by One UI. Johnson says the Android redesign is meant to attract teenagers, and the best device for that in the Android world is the Pixel. It operates just like the iPhone.

When Samsung and Google do work together, they make amazing products. Here’s Victoria Song, also for The Verge:

After a few years of iterative updates, Wear OS 6 is shaping up to be a significant leap forward. For starters, Gemini will replace Google Assistant on the wrist alongside a big Material 3 Expressive redesign that takes advantage of circular watch faces…

Williams says that adding Gemini is more than just replacing Assistant, which is already available on many Wear OS watches. Like most generative AI, one of the benefits is better natural language interactions, meaning you won’t have to speak your commands just so. Gemini in Wear OS will also interact with other apps. For example, you can ask about restaurant reservations, and Gemini will reference your Gmail for that information. Williams also says it’ll understand more complex queries, like summarizing information. You can also still use complications, the app launcher, a button shortcut or say “Hey Google” to access Gemini.

Wear OS these days is a joint venture between Samsung and Google and thus doesn’t have the same design disparity as Android. Nearly all Wear OS devices with Google Assistant will receive Gemini support, and all Wear OS 6 watches will get Material 3 Expressive (terrible name), regardless of who makes them. This shoves the knife deeper into Apple’s back — the Apple Watch isn’t even planned to receive the “more personalized Siri,” supposedly coming “later this year”¹ while Google’s smartwatches can all use one of the best large language models in the world. I don’t even think there’s a ChatGPT app on the Apple Watch. Don’t get me wrong, I still think the Apple Watch is the best smartwatch on the planet by a long shot, but add this to the pile of artificial intelligence features Apple has to get started on.

-

Imel also remarked about the “later this year” quality of many of Google’s Android updates announced Tuesday:

Bring back “Launching today” or “Available now” at tech events. “Later this year” kills 100% of the hype.

Technology journalists have to learn that “later this year” means nothing — it’s complete nonsense. We’ve been burned by Apple once and Google far too many times. It should kill the hype because hype should only exist for products that exist. ↩︎

iPhone Rumors: Foldable, All-Screen, Price Increase, New Release Schedule

Mark Gurman, reporting for Bloomberg in his Power On newsletter:

The good news is, an Apple product renaissance is on the way — it just won’t happen until around 2027. If all goes well, Apple’s product road map should deliver a number of promising new devices in that period, in time for the iPhone’s 20-year anniversary.

Here’s what’s coming by then:

- Apple’s first foldable iPhone, which some at the company consider one of two major two-decade anniversary initiatives, should be on the market by 2027. This device will be unique in that the typical foldable display crease is expected to be nearly invisible.

- Later in the year, a mostly glass, curved iPhone — without any cutouts in the display — is due to hit. That will mark the 10-year anniversary of the iPhone X, which kicked off the transition to all-screen, glass-focused iPhone designs.

- We should also have the first smart glasses from Apple. As I reported this past week, the company is planning to manufacture a dedicated chip for such a device by 2027. The product will operate similarly to the popular Meta Ray-Bans, letting Apple leverage its expertise in audio, miniaturization, and design. Given the company’s strengths, it’s surprising that Meta Platforms Inc. got the jump on Apple in this area.

2027 is shaping up to be a major year for Apple products. I’m excited about the foldable iPhone, though I’m also intrigued to hear more about the full-screen iPhone — Gurman reported on it last week as only including a single hole-punch camera with the Face ID components hidden under the screen. Astute Apple observers will remember this as being one of the original (leaked) plans for iPhone 14 Pro before it was eventually (leaked as being) modified to include the modern sensor array now part of the Dynamic Island. I personally have no animosity toward the current Dynamic Island and don’t think it’s too obtrusive, especially since that area would still presumably be used for Live Activities and other information when the all-screen design comes to market in a few years.

Rumors about the folding iPhone concept have been all over the place. Some reporters have asserted it’ll run an iPadOS clone, while others have said it’ll be more Mac-like, perhaps running a more desktop-like operating system. I’m not sure which rumors to believe — or even if the device Gurman is describing is the foldable iPad device that has been leaked ad nauseam — but I’m eager to at least try out this device, whatever it may be called. I don’t have a need for a foldable iPhone currently, but if it runs iPadOS when folded out, I might just ditch my iPad Pro for it, especially since it’s rumored to cost much more than the iPhone or iPad Pro.

Gurman also writes that he’s surprised Meta got ahead of Apple in the smart glasses space. I’m not at all: Meta has been working on this for years now as part of its “metaverse” Reality Labs project, while Apple has spent the same time getting Apple Vision Pro on the market. Both are abject failures — it’s just that Meta was able to elegantly pivot away from the metaverse as the artificial intelligence craze came around, while Apple was still preparing the Apple Vision Pro hardware in 2023. Frankly, 2027 is too far away for an Apple version of the Meta Ray-Ban glasses. In an ideal world, such a product should come by spring 2026 at the latest, while a truly augmented-reality, visionOS-powered one should arrive in 2027. I’m willing to cut Apple at least a bit of slack for taking a while to pivot away from virtual reality to AR since that’s a tough transition to nail, especially since I don’t think Meta will do it particularly gracefully this fall. But voice assistant-powered smart glasses are table stakes — and this is coming from an undeniable Meta hater.

Now for some more immediate matters. Rolfe Winkler and Yang Jie, reporting for The Wall Street Journal (Apple News+):

Apple is weighing price increases for its fall iPhone lineup, a step it is seeking to couple with new features and design changes, according to people familiar with the matter.

The company is determined to avoid any scenario in which it appears to attribute price increases to U.S. tariffs on goods from China, where most Apple devices are assembled, the people said.

The U.S. and China agreed Monday to suspend most of the tariffs they had imposed on each other in a tit-for-tat trade war. But a 20% tariff that President Trump imposed early in his second term on Chinese goods, citing what he said was Beijing’s role in the fentanyl trade, remains in place and covers smartphones.

Trump had exempted smartphones and some other electronics products from a separate “reciprocal” tariff on Chinese goods, which will temporarily fall to 10% from 125% under Monday’s trade deal.

Someone should tell Qatar that bribery doesn’t do much good even in the Trump administration. This detail is my favorite in the whole article:

At the same time, company executives are wary of blaming increases on tariffs. When a news report in April said Amazon might show the impact of tariffs to its shoppers, the White House called it a hostile act, and Amazon quickly said the idea “was never approved and is not going to happen.”

Cowards and jokers — all of them. The Journal reports Apple executives plan to blame the price increase on new shiny features coming to the iPhone supposedly this year, but they’re struggling: “It couldn’t be determined what new features Apple may offer to help justify price increases.” I can’t recall a single feature I’ve read about that would warrant any price increase on any iPhone model, and I’m positive the American people can see through Cook and his billionaire buddies’ cover for the Trump regime. The only reason for an iPhone price increase would be Trump’s tariffs, and if Apple is too cowardly to tell its customers that, it deserves a tariff-induced drop in sales.

If Apple really wants to cover for the Gestapo, it should shut up and keep the prices the same. Apple’s executives have taken the bottom-of-the-barrel approach to every single social, political, and business issue over the last five years, and they’re doing it again. Steve Jobs, despite his greed and indignation, always believed Apple’s ultimate goal should be to make the best products. Apple’s image was his top priority. Apple under Tim Cook, its current chief executive, has the exact opposite goal: to make the most money. Whether it’s screwing developers over or covering for the literal president of the United States, who should be able to play politics by himself, Cook’s Apple has taken every shortcut possible to undercut Apple’s goal of making the best technology in the world. How does increasing prices help Apple make better products? How does it increase Apple’s profit? How does disguising the reason for those price increases restore users’ faith in Apple as a brand?

It doesn’t seem like Cook cares. In hindsight, it makes sense coming from a guy who cozies up to communist psychopaths in China who openly use back doors Apple constructs for Chinese customers to spy on ordinary citizens. Spineless coward.

2027, check. 2025, check. Let’s talk 2026. Juli Clover, reporting for MacRumors (because I’m too cheap to pay for The Information):

Starting in 2026, Apple plans to change the release cycle for its flagship iPhone lineup, according to The Information. Apple will release the more expensive iPhone 18 Pro models in the fall, delaying the release of the standard iPhone 18 until the spring.

The shift may be because Apple plans to debut a foldable iPhone in 2026, which will join the existing iPhone lineup. The fall release will include the iPhone 18 Pro, the iPhone 18 Pro Max, an iPhone 18 Air, and the new foldable iPhone.

I think this makes sense. No other product line in Apple’s lineup (aside from the Apple Watch, an accessory) has all of its devices released during the same event. Apple usually releases consumer-level Mac laptops and desktops in the spring and pro-level ones in the summer and fall. The same goes for the iPads, which usually alternate between the iPad Pro and iPad Air due to the iPad’s irregular release schedule. The September iPhone event is Apple’s most-watched event by a mile, and replicating that demand in the spring could do wonders for Apple’s other springtime releases, like iPads and Macs. Apple’s iPhone line is about to become much more complicated, too, with a thin version and a folding one coming in the next few years, so bifurcating the line into two distinct seasons would also clean things up for analysts and reporters.

I also think the budget-friendly iPhone, formerly known as the SE, should move to an 18-month cycle. I dislike it when the low-end iPhone stands out as the old, left-behind model, especially when the latest budget iPhone isn’t a very good deal (it almost never is), but I also think it’s too low-end to be updated every spring. An alternating spring-fall release cycle would be perfect for one of Apple’s worst-selling iPhone models.

On Eddy Cue’s U.S. v. Google Testimony

Mark Gurman, Leah Nylen, and Stephanie Lai, reporting for Bloomberg:

Apple Inc. is “actively looking at” revamping the Safari web browser on its devices to focus on AI-powered search engines, a seismic shift for the industry hastened by the potential end of a longtime partnership with Google.

Eddy Cue, Apple’s senior vice president of services, made the disclosure Wednesday during his testimony in the US Justice Department’s lawsuit against Alphabet Inc. The heart of the dispute is the two companies’ estimated $20 billion-a-year deal that makes Google the default offering for queries in Apple’s browser…

“We will add them to the list — they probably won’t be the default,” he said, indicating that they still need to improve. Cue specifically said the company has had some discussions with Perplexity.

“Prior to AI, my feeling around this was, none of the others were valid choices,” Cue said. “I think today there is much greater potential because there are new entrants attacking the problem in a different way.”

There are multiple points to Cue’s words here:

- Cue ultimately intended for his testimony to prove that Google faces competition on iOS, and that artificial intelligence search engines complicate the dynamic, thus negating any anticompetitive effects of the deal. I’m skeptical that argument will work. It sounds like a joke. “This deal does nothing, so you should ignore it and let us get our $20 billion.” Convincing!

- Implicitly, Cue is describing a future for iOS where more search engines will be added to Safari, but he also rules out the possibility that Safari lets any developer set its own search engine as the default. When someone types a query into the “Smart Search” field in Safari, it creates a URL with custom parameters. For example, if I typed “hello” into Safari with Google as my default search engine, Safari would just navigate to https://www.google.com/search?q=hello, perhaps with some tracking parameters to let Google know Safari is the referrer. Apple could let any developer expose its own parameters to Safari to extend this to any search engine (like Kagi), but if Cue is to be believed, it probably has no plans to, because it makes a small commission on the current search engines’ revenue.¹

- Cue seems uninterested in describing how Apple would handle a scenario where its search deal with Google is thrown away. There was no mention of choice screens.
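The second point above describes Safari turning a typed query into a search engine URL. Here is a minimal sketch of how a developer-extensible version of that could work, assuming each engine supplies a URL template with a `{searchTerms}` placeholder, a convention borrowed from the OpenSearch description format. This is not how Safari is known to work internally: the `SEARCH_TEMPLATES` registry, `register_engine`, and `build_search_url` are hypothetical names, the DuckDuckGo and Kagi templates are illustrative, and tracking or referrer parameters are left out.

```python
from urllib.parse import quote_plus

# Hypothetical registry: each engine exposes a URL template with a
# {searchTerms} placeholder (OpenSearch-style). Illustrative only,
# not Safari's actual engine list or data format.
SEARCH_TEMPLATES = {
    "google": "https://www.google.com/search?q={searchTerms}",
    "duckduckgo": "https://duckduckgo.com/?q={searchTerms}",
    "kagi": "https://kagi.com/search?q={searchTerms}",
}


def register_engine(name: str, template: str) -> None:
    """Let a (hypothetical) third-party developer add its own engine."""
    if "{searchTerms}" not in template:
        raise ValueError("template must contain {searchTerms}")
    SEARCH_TEMPLATES[name] = template


def build_search_url(query: str, engine: str = "google") -> str:
    """Turn a Smart Search field query into the URL the browser opens."""
    template = SEARCH_TEMPLATES[engine]
    # quote_plus percent-encodes reserved characters and turns spaces into '+'
    return template.replace("{searchTerms}", quote_plus(query))


if __name__ == "__main__":
    print(build_search_url("hello"))
    print(build_search_url("apple vs epic", engine="kagi"))
```

Running it prints `https://www.google.com/search?q=hello`, matching the Google example above, followed by `https://kagi.com/search?q=apple+vs+epic`. The appeal of a template design is that adding an engine becomes a single data entry rather than a business deal, which is the kind of opening Cue’s testimony suggests Apple isn’t planning.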

Bloomberg’s framing of the new search engines as a “revamp” is disingenuous. From Cue’s testimony, Apple seems to be in talks with Perplexity to add it to Safari’s search engine picker, presumably with some revenue-sharing agreement like it has with DuckDuckGo, Bing, and Yahoo. This is, however, different from a potential deal to integrate Gemini, Claude, and other models into Siri and Apple Intelligence’s Writing Tools suite, which Sundar Pichai, Google’s chief executive, is eager to do. I presume Cue is wary of discussing those potential deals in court because the judge might shut them down, too. While OpenAI didn’t pay Apple anything to be placed in iOS (and vice versa), I think Apple would demand something from Google, or perhaps the opposite. Google is a very different company from OpenAI.

Technology is changing fast enough that people may not even use the same devices in a few years, Cue said. “You may not need an iPhone 10 years from now as crazy as it sounds,” he said. “The only way you truly have true competition is when you have technology shifts. Technology shifts create these opportunities. AI is a new technology shift, and it’s creating new opportunities for new entrants.”

Cue said that, in order to improve, the AI players would need to enhance their search indexes. But, even if that doesn’t happen quickly, they have other features that are “so much better that people will switch.”

Of course Cue would be the one to say this, as Apple’s services chief, but I just don’t buy it. Where is this magical AI supposed to run — in thin air? The iPhone is a hardware product and AI — large language models or whatever comes out in 10 years — is software. Apple must make great hardware to run great software, per Alan Kay, the computer scientist Steve Jobs quoted onstage during the evergreen 2007 iPhone introduction keynote. Maybe Cue imagines people will run AI on their Apple Watches or some other wearable device in the distant future, but those will never replace the smartphone. Nothing will ever beat a large screen in everyone’s pocket.

Cue is correct to assert that AI caused a major shakeup in the search engine and software industry. He should know that because Apple is arguably the only laggard in the industry — Apple Intelligence, which Cue is partially responsible for, is genuinely some of the worst software Apple has shipped in years. But the reason Apple is even floated as a possible entrant in the race to AI is because of the iPhone, a piece of hardware over a billion people carry with them everywhere. Jobs was right to plan iOS and the iPhone together — software and hardware in Apple products are inseparable, and the iPhone is Apple’s most important hardware product. The iPhone isn’t going anywhere.

Some pundits have brushed off Cue’s words as speculation, which is naïve. If this company is sending senior executives to spitball in court, it really does deserve some of its employees going to jail for criminal contempt. I think Apple is done lying to judges and this is indicative of some real conversations happening at Apple. Tim Cook, Apple’s chief executive, is eager to find a way to close his stint at Apple out with a bang, and it appears his sights are set on augmented reality, beginning with Apple Vision Pro and eventually extending with some form of AR glasses powered by AI. That’s a long shot, and even if it succeeds, it won’t replace the iPhone. There’s something incredibly attractive to humans about being lost in a screen that just isn’t possible with any other form of auxiliary technology. Pocket computers are the future of AI.

For a real-life testament to this, just look at the App Store’s Top Apps page. ChatGPT is the first app on the list. While Apple the company and its software division are losing the race to AI, the iPhone is winning. People are downloading the ChatGPT app and subscribing to the $20 monthly ChatGPT Plus tier, giving 30 percent to Apple on every purchase without Apple lifting a finger. The most powerful AI-powered device in the world is the iPhone (or maybe the Google Pixel).

-

I put out a post asking for confirmation about this because all of the LLM search tools gave me different answers. Claude and Perplexity said no, Gemini couldn’t give me proper sources, and only ChatGPT o3 was able to pull the Business Insider article, which I eventually deemed trustworthy enough to rely on. (Gemini, meanwhile, only cited an Apple Discussions Forum post from 2016.) Traditional Google Search failed entirely, and if I hadn’t probed the better ChatGPT model — or if I didn’t have a lingering suspicion the revenue-sharing agreements existed — I would’ve missed this detail. The web search market has lots of new competition, but all the competition is terrible. (Links to my Gemini 2.5 Pro, ChatGPT o3, Claude 3.7 Sonnet, and Perplexity chats here.) ↩︎

It’s Here: A ‘Get Book’ Button in the Kindle App

Andrew Liszewski, reporting for The Verge:

Contrary to prior limitations, there is now a prominent orange “Get book” button on Kindle app’s book listings…

Before today’s updates, buying books wasn’t a feature you’d find in the Kindle mobile app following app store rule changes Apple implemented in 2011 that required developers to remove links or buttons leading to alternate ways to make purchases. You could search for books that offered samples for download, add them to a shopping list, and read titles you already own, but you couldn’t actually buy titles through the Kindle or Amazon app, or even see their prices.

To avoid having to pay Apple’s 30 percent cut of in-app purchases, and the 27 percent tax on alternative payment methods Apple introduced in January 2024, Amazon previously required you to visit and login to its online store through a device’s web browser to purchase ebooks on your iPhone or iPad, which were then synchronized to the app. It was a cumbersome process compared to the streamlined experience of buying ebooks directly on a Kindle e-reader.

Further commentary from Dan Moren at Six Colors:

How long this new normal will last is anyone’s guess, but again, though Apple has already appealed the court’s decision, it’s hard to imagine the company being able to roll this back—the damage, in many ways, is already done and to reverse course would look immensely and transparently hostile to the company’s own customers: “we want your experience to be worse so we get more of the money we think we deserve.” Not a great look.

Just as Moren writes, if Apple really does win on appeal and gets to revert the changes it made last week, there should be riots on the streets of Cupertino. Apple’s primary argument for In-App Purchase, its bespoke system for software payments, is that it’s more secure and less misleading than whatever dark patterns app developers may try to employ, but that argument is moot because developers have always sold physical goods and services exclusively through their own payment processors. Uber and Amazon, as preeminent examples, do not use IAP to let users book rides or order products. That doesn’t make them any less secure or more confusing than an app that does use IAP.

No matter how payments are collected, the broad App Store guidelines apply: apps cannot promote scams or steal money from customers. That’s just not allowed in the store, regardless of whether a developer uses IAP or their own payment processor. The processor and business model are separately regulated parts of the app and have been since the dawn of the App Store. That separation should extend to software products, like e-books or subscriptions, too. If an app is promoting a scam subscription or (lowercase) in-app purchase, it should be taken down, not because it didn’t use IAP, but because it’s promoting a scam. I don’t trust Apple with my credit card number any more than I do Amazon.

If Apple wins on appeal and reverses course by killing the new Kindle app (among many others), it will probably be the stupidest thing Tim Cook, the company’s chief executive, will ever do. The worst part is that I wouldn’t even put it past him. Per the judge’s ruling last week, Cook took the advice of Luca Maestri, his chief financial officer, and a finance team whose vice president the judge found lied under oath, over that of Phil Schiller, the company’s decades-long marketing chief and a protégé of Steve Jobs. Schiller is as smart an Apple executive as they come: He’s staunchly pro-30 percent fee and anti-Epic Games, but he follows the law. He knows when something would go too far, and he’s always aware of Apple’s brand reputation.

When Cook threw the Mac into the garbage can in the years before the transition to Apple silicon, Schiller invited a group of Mac reporters to all but state outright that Pro Macs would come. The Mac Pros were burning up, the MacBook Pros had terrible keyboards, and all of the iMacs were consumer-grade, yet Schiller successfully convinced those reporters that new Pro Macs would exist and that the Mac wasn’t forgotten about. Schiller is the last vestige of Jobs-era Apple left at the company, and it’s so disheartening to hear that Cook decided to trust his loser finance people instead of someone with a genuine appreciation and respect for the company’s loyal users.

All of this is to say that Cook ought to get his head examined, and until that’s done, I have more confidence in the legal system upholding what I believe was a rightful ruling than Apple doing what’s best for its users. It’s a sad state of affairs down there in Cupertino.

Judge in Epic Games v. Apple Case Castigates Apple for Violating Order

Josh Sisco, reporting for Bloomberg:

Apple Inc. violated a court order requiring it to open up the App Store to outside payment options and must stop charging commissions on purchases outside its software marketplace, a federal judge said in a blistering ruling that referred the company to prosecutors for a possible criminal probe.

US District Judge Yvonne Gonzalez Rogers sided Wednesday with Fortnite maker Epic Games Inc. over its allegation that the iPhone maker failed to comply with an order she issued in 2021 after finding the company engaged in anticompetitive conduct in violation of California law.

Gonzalez Rogers also referred the case to federal prosecutors to investigate whether Apple committed criminal contempt of court for flouting her 2021 ruling…

Epic Games Chief Executive Officer Tim Sweeney said in a social media post that the company will return Fortnite to the US App Store next week.

To hide the truth, Vice-President of Finance, Alex Roman, outright lied under oath. Internally, Phillip Schiller had advocated that Apple comply with the Injunction, but Tim Cook ignored Schiller and instead allowed Chief Financial Officer Luca Maestri and his finance team to convince him otherwise. Cook chose poorly.

The Wednesday order by Judge Gonzalez Rogers undoes essentially every triumph Apple had in the 2021 case, which ended early last year after the Supreme Court said it wouldn’t hear Epic’s appeal. The judge sided with Apple on practically every issue Epic sued over and only ordered the company to make one change: to allow external payment processors in the App Store. Apple begrudgingly complied in the most argumentative way possible: by charging a 27 percent fee on transactions made outside the App Store and forcing developers who used the program to report their sales to Apple every month to ensure they were following the rules. Epic didn’t like that (because it’s purely nonsensical), so it took Apple back to court, alleging it violated the court order. Judge Gonzalez Rogers agrees.

The judge’s initial order allowed Apple to keep Epic off the App Store by revoking its developer license and even forced Epic to pay Apple millions of dollars in legal fees because she ruled Epic’s lawsuit was virtually meritless. That case was a win for Apple and only required that it extend its reader app exemption (which allows certain apps to use external payment processors without any fees) to all apps, including games. The court found that Apple only providing that exemption to reader apps is anticompetitive and ordered Apple to open it up to everyone, which Apple didn’t do. It’s a frustrating own-goal, and Apple has nobody to blame but itself.

For the record, I still think Apple shouldn’t be legally compelled to allow external payment processors, but I also think it ought to allow them, as it’s a small concession for major control over the App Store. Apple’s rules that stop developers from steering customers away from In-App Purchase, its in-house payment processing system, are known as “anti-steering” provisions, and both the European Union and the United States have litigated them extensively. The optics are terrible: There’s sound business reasoning that Apple should be able to charge 15 to 30 percent per sale when developers use IAP, but if a developer doesn’t want to pay the commission, it should be able to circumvent it by using an external payment processor moderated by Apple. I really do understand both sides of the coin here (Apple thinks external payment processors are unsafe while developers yearn for more control), but I ultimately still think Apple should let this slide.

I’m not saying Apple shouldn’t regulate external processors in App Store apps. It should, but carefully. Many pundits, including Sweeney himself, have derided Apple’s warnings when linking to an external website as “scare screens,” but I think they’re perfectly acceptable. It’s Apple’s platform, and I think it should be able to govern it as it wants to protect its users. There are many cases of people not understanding or knowing what they’re buying on the web, and IAP drastically decreases accidental purchases in iOS apps. But every developer should get to choose: use IAP and give 30 percent to Apple, or make more money while running the risk of irritating users. The bottom line is that Apple can continue to exert control over how those payment processors work and how they’re linked to just by giving up the small financial kickback.

Apple last year got to make a choice: It could either give up the rent and keep control over how external payment processors work, or it could keep the rent and lose control. It chose the latter option, and on Wednesday, it lost its control. What a terrible own-goal. It lost the legal fight, lost its control, lost its rent, and now has to let its archenemy back on its platform. (That last claim is false; read the update below for more.) This is the result of years of pettiness, and while I could quibble about Judge Gonzalez Rogers’ ruling and whether it might be too harsh (I don’t think it is), I won’t, because Apple’s defiance is petulant and embarrassing.

Update, May 1, 2025: I’m ashamed I didn’t realize this when I wrote this post on Wednesday, but Apple is under no obligation to let Epic or Fortnite back on the App Store. John Gruber pointed this oversight out on Daring Fireball:

None of this, as far as I can see, has anything to do with Epic Games or Fortnite at all, other than that it was Epic who initiated the case. Give them credit for that. But I don’t see how this ruling gets Fortnite back in the App Store. I think Sweeney is just blustering — he wants Fortnite back in the App Store and thinks by just asserting it, he can force Apple’s hand at a moment when they’re wrong-footed by a scathing federal court judgment against them.

Sweeney is a cunning borderline criminal mastermind, and I’m embarrassed I didn’t catch this earlier. Of course he’s blustering: The ruling says nothing about Epic at all, only that Apple violated the court’s first order in 2021. I read most of the ruling Wednesday night as it came out, but I seemingly overlooked this massive detail and took Sweeney at his word after I read his post on X. I shouldn’t have done that. Apple is still under no obligation to bring Epic back on the store, it hasn’t said anything about reinstating Epic’s developer license in its statement after the ruling, and Sweeney’s “We will return Fortnite to the US iOS App Store next week” statement is a fantastical (and apparently successful) attempt to get in the news again and offer Apple a “peace deal.”

I think it’s also a failure on journalists’ part not to report this blatant mockery of the legal system. Yes, Apple was admonished severely by the court on Wednesday, absorbing a major hit to its reputation, but that shouldn’t distract from the fact that Sweeney is a liar and always has been. His own company got caught flat-footed by the Federal Trade Commission years ago for tricking people into buying in-game currency. Sweeney’s words shouldn’t be taken at face value, especially when he’s got nothing to prove his far-fetched idea that “Fortnite” somehow should be able to return to the App Store “next week.” Seriously, this post is so brazen, it makes me want to bleach my eyes:

We will return Fortnite to the US iOS App Store next week.

Epic puts forth a peace proposal: If Apple extends the court’s friction-free, Apple-tax-free framework worldwide, we’ll return Fortnite to the App Store worldwide and drop current and future litigation on the topic.

I can’t believe I fell for this. I can’t believe any journalist fell for this.

Forcing a Chrome Divestiture Ignores the Real Problem With Google

Monopolies aren’t illegal. Anticompetitive business conduct is.

It seems like everyone and their dog wants to buy Google Chrome after Google lost the search antitrust case last year and the Justice Department named a breakup as one of its key remedies. I wrote shortly after the company lost the case that a Chrome divestiture wouldn’t actually fix the monopoly issue because Chrome itself is a monopoly, and simply selling it would transfer ownership of that monopoly to another company overnight. And if Chrome spun out and became its own company, it wouldn’t even last a day because the browser itself lacks a business model. My bottom line in that November piece was that Google ultimately makes nothing from Chrome and that the real money-maker is Google Search, which everyone already uses because it’s the best free search engine on the web. The government, and Judge Amit Mehta, who sided with the government, disagree with the last part, but I still think it’s true.

Of course, everyone wants to buy Chrome because everyone wants to be a monopolist. OpenAI, in my eyes, is perhaps the most serious buyer, given the amount of capital it has and how much it has to gain from owning the world’s most popular web browser. Short-term, it would be marvelous for OpenAI, and that’s ultimately all it cares about. OpenAI has never been in it for the long run. It isn’t profitable, it isn’t even close to breaking even, and it essentially acts as a leech on Microsoft’s Azure servers. Sending all Chrome queries through ChatGPT would melt the servers and probably cause the next World War because of some nonsense ChatGPT spewed, but OpenAI doesn’t care. Owning Chrome would make OpenAI the second-most important company on the web, behind only Google, which would still control Google Search, the world’s most visited website. That last point is exactly why it doesn’t make a modicum of logical sense to divest Chrome.

What would hurt Google, however, would be forcing a divestiture of Google Search, or, in a perhaps more likely scenario, Google Ads, which also works as a monopoly over online advertising. I think eliminating Google’s primary source of revenue overnight would be extremely harsh, but maybe it’s necessary. Google Search has become one of the worst experiences on the web recently, and I wouldn’t mind if it became its own company. I think it would be operated better than Google, which seems aimless and poorly managed. It could easily strike a deal with the newly minted ad exchange and platform, which would also be spun off, and become an attractive place to sell ads while breaking free from the chains of Google’s charades. That’s good antitrust enforcement because it significantly weakens a monopoly while allowing a new business to thrive independently. Sure, Search would still be a monopoly when spun off by itself, but it would have an incentive to become a better product. Google is an advertising company, not a search company, and that allowed Search to stagnate. This is why monopolies are dangerous: They cause stagnation and eliminate competition simultaneously.

I’m conflating both of these Google cases intentionally because they work hand in hand. Google Search is profitable because of Google’s online advertising stronghold; Google can sell ads online thanks to the popularity of Search. The government could force Google to sell one or both of these businesses. Selling both might be excessive, but I think it would still be viable because it would force Google to begin innovating again. Its primary revenue streams would be Google Workspace, YouTube, Android, and Google Cloud, and those are four very profitable businesses with long-term success potential, even without the ad exchange. Google would be forced to do what every other company on the web has been doing for decades: buy and sell ads. While it wouldn’t own the ad exchange anymore, it could still sell ads on YouTube. It’s just that those ads would have to be a good bang for the buck because they wouldn’t be the only option anymore. If an advertiser didn’t like the rates YouTube was charging, they could go spend their money on the newly spawned independent search engine. This way, Google could no longer enrich its other businesses with one monopoly.

All of this brainstorming makes it increasingly obvious that forcing Google to sell Chrome does nothing to break apart Google’s monopoly. It only punishes the billions of people who use Chrome and gets a nice dig in at Google’s ego. I’m hard-pressed to see how those are “remedies” after the most high-profile antitrust lawsuit since United States v. Microsoft decades earlier. Chrome acts as a funnel for Google Search queries, and untying those is practically impossible. This is where the Justice Department’s logic falls apart: It thinks Search is popular because of some shady business tactics on Google’s part. While those shady practices (which, according to the court, Google definitely engaged in) may have contributed to Search’s prominence, they don’t account for the success of Google’s search product. For years, it really did seem like magic. The issue now is that it doesn’t, and that nobody else can innovate anymore because of Google’s restrictive contracts. The culprit has never been that Google Search is popular, Google Chrome is popular, or that Google makes too much money; the issue is that Google blocks competition from entering the market via lucrative search exclusivity deals.

Breaking up Google is a sure-fire way to eliminate the possibility of these contracts, but bringing Chrome up in the conversation ignores why Google lost this case in the first place. While Chrome might have once been how Search got so popular, it isn’t anymore. People use Google Search in Safari, Edge, Firefox: every single browser. If Chrome was a key facet of Search’s success, that isn’t illegal, monopolistic, or even anti-consumer. It’s just making a good product and using the success of that product to help another one grow, also known as business. Crafting a search engine and a cutting-edge browser to send people to that search engine isn’t an exclusivity contract that prevents others from gaining a competitive advantage, and forcing Google to sell Chrome off is a nonsensical misunderstanding of the relationship between Google’s products. The core problem here is Google Search, not Chrome, and the Justice Department needs to break Search’s monopoly in some meaningful way that doesn’t hurt consumers. That could be calling off contracts, forcing Google to sell Search, or forcing it to open up its search index to competitors. Whatever it is, the remedy must relate to the core product.

The Justice Department, or really anyone who cares about this case, must understand that Google Search is overwhelmingly popular because it’s a good product. The way it bolstered that product is at the heart of the controversy, and eliminating those cheap-shot ways Google continues to elevate itself in the market is the Justice Department’s job, but ultimately, nobody will stop using Google. Neither should anyone stop using it: People should use whatever search engine they like the most, and boosting competitors is not the work of the Justice Department. Paving the way for competition to exist, however, is, and the current search market significantly lacks competition because Google prevents any other company from succeeding. That is what the court found. It (a) found that Google is a monopolist in the search industry, but (b) also found Google has illegally maintained that monopoly and that remedies are in order to prevent that illegal action. It isn’t illegal to be a monopolist in the United States, unlike some other jurisdictions. It is illegal, however, to block other companies from fairly competing in the same space. The Justice Department is regulating as if being a monopolist is illegal, when in actuality, it should focus its efforts on ensuring that Google’s monopoly is organically built from now on.

Part of the blame lies with Google’s lawyers, but it isn’t too late for them to pick up the pace. They can’t defend their ludicrous search contracts anymore, but they can make the case that Google no longer needs them. If we’re being honest, the best possible outcome for Google here is if it just gets away with ending the contracts and is allowed to keep all of its businesses and products. That’s because it doesn’t rely on those contracts anymore to stay afloat. Google’s legal strategy in this case (the one that led to its loss) was to try to convince the court that its search contracts were necessary to continue doing business so competitively, when that’s an absolutely laughable thing to say about a product that owns nearly 90 percent of the market. Judge Mehta didn’t buy that argument because it’s born out of sheer stupidity. Instead, Google’s argument should’ve begun by conceding that the contracts are indeed unnecessary and proving over the trial that Google Search is widespread because it’s a good product. It could have pointed to Bing’s minuscule market share despite its presence as the default search engine on Windows. That’s a real point, and Google blew it.

If Google offers the ending of these contracts as a concession, that would be immensely appealing to the court. It might not be enough for Google to run away scot-free, but it would be something. If it, however, continues to play the halfwitted game of hiding behind the contracts, it probably will lose something much more important. As for what that’ll be, my guess is as good as anyone else’s, but I find it hard to imagine a world where Judge Mehta agrees to force Google to sell Chrome. That decision would be purely irrational and wouldn’t jibe with the rest of his rulings, which have mainly been rooted in fact and appear to put citizens’ interests first. Moreover, I don’t think the government has met the burden of proving a Chrome divestiture would make a meaningful dent in Google’s monopoly, and I don’t believe it has the facts to do so.

The contracts are almost certainly done for, though, and for good reason. In practice, I think this will mean more search engine ballots, i.e., choice screens that appear when a new iPhone is set up or when the Safari app is first opened, for example. Most people there will probably still pick Google, just like they do on Windows, much to Microsoft’s repeated chagrin, and there wouldn’t be anything stopping Apple and other browser makers from keeping Google as the default. I wouldn’t even put it past Apple, which I still firmly believe thinks Google Search is the best, most user-intuitive search engine for Apple devices. If Eddy Cue, Apple’s services chief, thought Google wasn’t very good and was only agreeing to the deal for the money, I believe he would’ve said so under penalty of perjury. He didn’t, however; he said Google was the best product, and it’s tough to argue with him. And for the record, I don’t think Apple will ever make its own search engine or choose a default other than Google: It’ll either be Google or a choice screen, similar to the one in the European Union. (I find the choice screens detestable and think every current browser maker should keep Google as the default for simplicity’s sake, proving my point that the contracts are unneeded.)

I began writing this nearly 2,000 words ago to explain why I think selling Chrome is a short-sighted idea that fails to accomplish any real goals. But more importantly, I believe I covered why Google is a monopolist in the first place and how it even got to this situation. My problem has never been that Google or any other company operates a monopoly; what’s disconcerting is how Google maintained that stronghold. Do people use Google Search of their own volition? Of course they do, and they won’t be stopping anytime soon. But is it simultaneously true that the search stagnation and dissatisfaction we’ve had with Google Search results over the past few years is a consequence of Google’s unfair business practices? Absolutely, and it’s the latter conclusion the Justice Department needs to fully grok to litigate this case properly. Whatever remedy the government pursues, it needs to light a fire under Google. Historically, the most successful method for that has been to elevate the competition, but when the others are so far behind, it might just be better to weaken the search product temporarily to force Google to catch up and innovate along the way.

Apple Plans to Assemble All U.S. iPhones in India by 2026

Michael Acton, Stephen Morris, John Reed, and Kathrin Hille, reporting for the Financial Times:

Apple plans to shift the assembly of all US-sold iPhones to India as soon as next year, according to people familiar with the matter, as President Donald Trump’s trade war forces the tech giant to pivot away from China.

The push builds on Apple’s strategy to diversify its supply chain but goes further and faster than investors appreciate, with a goal to source from India the entirety of the more than 60mn iPhones sold annually in the US by the end of 2026.

The target would mean doubling the iPhone output in India, after almost two decades in which Apple spent heavily in China to create a world-beating production line that powered its rise into a $3tn tech giant.

This is really important news, and I’m surprised I haven’t heard much chatter about it online. China is the best place to manufacture iPhones en masse because the country effectively has an entire city dedicated to making them 24 hours a day, 365 days a year. Replicating that supply chain elsewhere has been extremely difficult for Apple for obvious reasons: It’s nearly impossible to find such a dedicated workforce anywhere else in the world. American commentators usually frame things in terms of five-day work weeks or eight-hour shifts, but in China, they just don’t have limits. This system is so bad that Foxconn, Apple’s manufacturer, has resorted to putting anti-suicide nets around the buildings that house these poor workers, but this isn’t an essay on how the marriage between capitalism and communism is used for human exploitation.

Building the iPhone infrastructure in India is a monumental task. Apple has already gotten started, but its Indian production isn’t ready for peak iPhone season, i.e., when the phones first come out in September. Anyone who buys an iPhone in the United States on pre-order day will see a shipping notification from China, not Brazil or India. Apple begins manufacturing phones in other countries months later because those factories aren’t equipped to handle the demand of American consumers leading up to the holidays. I’m not saying Apple hasn’t built up infrastructure to handle this demand in the past few years (it has), but there’s still a lot of work to be done, and I’m not sure how it will do it in a year. Either way, this task is perfectly suited for Tim Cook, Apple’s chief executive, who is one of the few people with the operational prowess to handle complexities like this.

As I said when I wrote about Trump’s tariffs earlier in April, the most alarming danger remains the prospect of a war between China and Taiwan. Apple can absorb tariffs by raising prices or blunt them by playing politics in Washington; they simply aren’t as pressing an issue as the company’s entire supply chain being put on hold for however many years. Apple still relies on Taiwan’s factories for nearly all of its high-end microprocessors, and Taiwan Semiconductor Manufacturing Company’s Arizona plant isn’t good enough and won’t be for a while. Apple is still heavily reliant on China for final assembly, and the sooner it can get out of these two countries, the better it is for Apple’s long-term business prospects.

Moving iPhone assembly to India, Mac and AirPods manufacturing to Vietnam, etc., is one large step toward shielding Apple’s business from global instability. (With the possibility of a war in India looming, I’m not sure how large of a step it is.) But Apple’s dependence on Taiwan for nearly all of its processors is even more concerning. We can build microprocessors in the United States; we can’t build iPhones here. They’re different kinds of manufacturing. The quicker Apple gets the Trump administration to bless the Chips and Science Act, the better it is for Apple’s war preparedness plan, because I fully believe Apple’s largest manufacturing vulnerability is Taiwan, not China. (China was the biggest concern two years ago, but from this report, it’s not difficult to assume Apple is close to significantly decreasing its reliance on China.)

On OpenAI’s Model Naming Scheme

Hey ChatGPT, help me name my models

Good to see you too, ChatGPT.

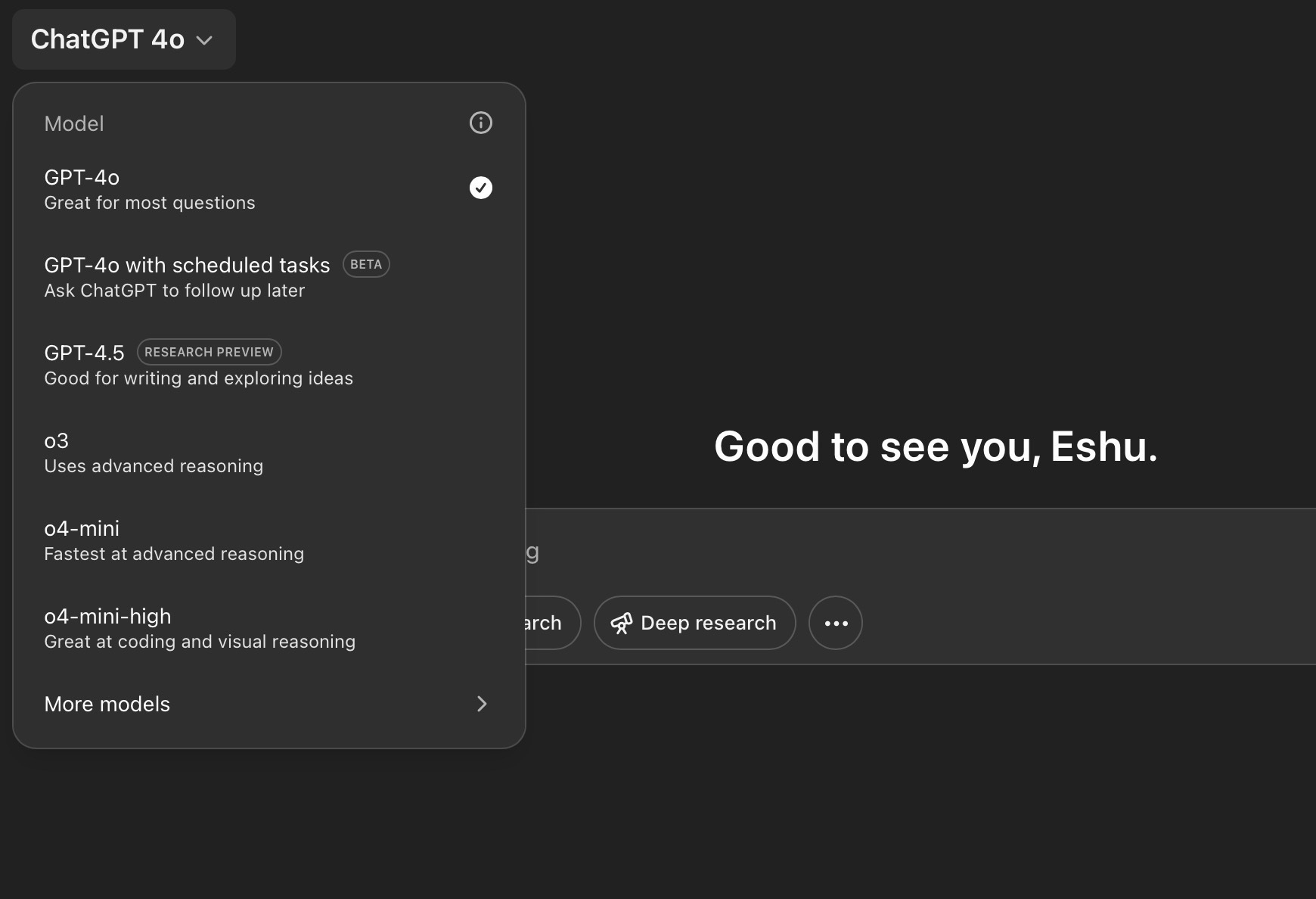

Last week, OpenAI announced two new flagship reasoning models: o3 and o4-mini, with the latter including a “high” variant. The names were met with outrage across the internet, including from yours truly, and for good reason. Even Sam Altman, the company’s chief executive, agrees with the criticism. But generally, the issue isn’t with the letters because it’s easy to remember that if “o” comes before the number, it’s a reasoning model, and if it comes after, it’s a standard “omnimodel.” “Mini” means the model is smaller and cheaper, and a dot variant is some iteration of the standard GPT-4 model (like 4.5, 4.1, etc.). That’s not too tedious to think about when deciding when to use each model. If the o is after the number, it’s good for most tasks. If it’s in front, the model is special.
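To make that rule of thumb concrete, here is a minimal sketch of a name decoder. The `describe_model` function is hypothetical: it encodes only the heuristic described above (the position of the “o,” “mini,” dot versions, and the “high” suffix), not any official OpenAI naming specification.

```python
import re


def describe_model(name: str) -> str:
    """Decode an OpenAI model name with this post's rule of thumb.

    Hypothetical helper: it encodes the heuristic described in the text,
    not an official OpenAI naming specification.
    """
    # Accept both "gpt-4o" and "4o" styles (str.removeprefix needs Python 3.9+).
    base = name.lower().removeprefix("gpt-")
    if re.match(r"o\d", base):
        # "o" before the number (o3, o4-mini): a reasoning model.
        traits = ["reasoning model"]
    elif re.match(r"\d+o", base):
        # "o" after the number (4o, 4o-mini): a standard omnimodel.
        traits = ["omnimodel"]
    elif re.match(r"4\.\d+", base):
        # A dot variant (4.1, 4.5): an iteration of standard GPT-4.
        traits = ["GPT-4 iteration"]
    else:
        traits = ["unrecognized"]
    if "mini" in base:
        traits.append("smaller and cheaper")
    if base.endswith("-high"):
        traits.append("high reasoning effort")
    return ", ".join(traits)


for model in ["o3", "o4-mini", "o4-mini-high", "gpt-4o", "gpt-4.5"]:
    print(f"{model}: {describe_model(model)}")
```

Nothing the decoder returns says whether o4-mini-high beats o3; the larger number only suggests it should, and that is where the scheme stops being self-explanatory.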

The confusion comes between OpenAI’s three reasoning models, which the company describes like this in the model selector on the ChatGPT website and the Mac app:

- o3: Uses advanced reasoning

- o4-mini: Fastest at advanced reasoning

- o4-mini-high: Great at coding and visual reasoning

This is nonsensical. If the 4o/4o-mini naming is to be believed, the faster version of the most competent reasoning model should be o3-mini, but alas, that’s a dumber, older model. o4-mini-high, which has a higher number than o3, is a worse model in many, but not all, benchmarks. For instance, it scored 68.1 percent on the software engineering benchmark OpenAI advertises in its blog post announcing the new models, while o3 scored 69.1 percent. That’s a minuscule difference, but it still is a worse model in that scenario. And that benchmark completely ignores o4-mini, which isn’t listed anywhere in OpenAI’s post; the company says “all models are evaluated at high ‘reasoning effort’ settings—similar to variants like ‘o4-mini-high’ in ChatGPT.”

Anyone looking at OpenAI’s model list would be led to believe o4-mini-high (and presumably its not-maxed-out variant, o4-mini) would be some coding prodigy, but it isn’t. o3 is, though — it’s the smartest of OpenAI’s models in coding. o3 also excels in “multimodal” visual reasoning over o4-mini-high, which makes the latter’s description as “great at… visual reasoning” moot when o3 does better. OpenAI, in its blog post, even says o3 is its “most powerful reasoning model that pushes the frontier across coding, math, science, visual perception, and more.” o4-mini only beats it in the 2024 and 2025 competition math scores, so maybe o4-mini-high should be labeled “great at complex math.” Saying o4-mini-high is “great at coding” is misleading when o3 is OpenAI’s best offering.

The descriptions of o4-mini-high and o4-mini should emphasize higher usage limits and speed, because truly, that’s what they excel at. They’re not OpenAI’s smartest reasoning models, but they blow o3-mini out of the water, and they’re way more practical. For Plus users who must suffer OpenAI’s usage caps, that’s an important detail. I almost always query o4-mini because I know it has the highest usage limits even though it isn’t the smartest model. In my opinion, here’s what the model descriptions should be:

- o3 Pro (when it launches to Pro subscribers): Our most powerful reasoning model

- o3: Advanced reasoning

- o4-mini-high: Quick reasoning

- o4-mini: Good for most reasoning tasks

To be even more ambitious, I think OpenAI could ditch the “high” moniker entirely and instead implement a system where o4-mini intelligently decides, based on current usage, the user’s request, and overall system capacity, whether to use more or less power. The free tier of ChatGPT already does this: When available, it gives users access to 4o over 4o-mini, but it gives priority access to Plus and Pro subscribers. Similarly, Plus users ought to receive as much o4-mini-high access as OpenAI can support, and when it needs more resources (or when a query doesn’t require advanced reasoning), ChatGPT can fall back to the cheaper model. This intelligent rate-limiting system could eventually extend to GPT-5, whenever that ships, effectively making it so that users no longer must choose between models. They still should be able to, of course, but just like the search function, ChatGPT should use the best tool for the job based on the query.
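Here is a minimal sketch of how that fallback could work, assuming a hypothetical router that knows the user’s tier, a per-user high-effort allowance, overall system load, and a flag from some classifier saying whether the query needs deep reasoning. None of these names or thresholds come from OpenAI; the model strings simply stand in for the high- and standard-effort settings.

```python
from dataclasses import dataclass

# Hypothetical load ceilings: once system load reaches a tier's ceiling,
# that tier loses high-effort access, so paid tiers keep it the longest.
LOAD_CEILING = {"free": 0.5, "plus": 0.8, "pro": 0.95}


@dataclass
class Request:
    tier: str                   # "free", "plus", or "pro"
    needs_deep_reasoning: bool  # assumed output of some query classifier
    high_effort_used: int       # high-effort messages sent in the current window
    high_effort_cap: int        # hypothetical per-user high-effort allowance


def route(req: Request, system_load: float) -> str:
    """Choose the high- or standard-effort variant for one request.

    system_load is the fraction of serving capacity in use (0.0 to 1.0).
    """
    if not req.needs_deep_reasoning:
        return "o4-mini"  # easy queries never need the expensive setting
    if req.high_effort_used >= req.high_effort_cap:
        return "o4-mini"  # this user has spent their high-effort allowance
    if system_load >= LOAD_CEILING[req.tier]:
        return "o4-mini"  # capacity is tight; shed lower tiers first
    return "o4-mini-high"


print(route(Request("plus", True, 10, 50), system_load=0.7))
print(route(Request("free", True, 0, 5), system_load=0.7))
```

Ordering the checks this way means an easy question falls back to the cheaper setting even when capacity is plentiful, while paid tiers hold on to high-effort access longer as load rises, which mirrors how the free tier already prefers 4o when it’s available.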

ChatGPT could do with a lot of model cleanup in the next few months. I think GPT-4.5 is nearly worthless, especially after the recent updates to GPT-4o, whose personality has become friendlier and more agentic. Altman championed 4.5’s writing style when it was first announced, but now the model is being phased out of the company’s application programming interface because it’s too expensive and 4.1 (whose personality has been transplanted into 4o for ChatGPT users) smokes it in nearly every benchmark. 4.5 doesn’t do anything well except write, and I just don’t think it deserves such a prominent position in the ChatGPT model picker. It’s an expensive, clunky model that could just be replaced by GPT-4o, which, unlike 4.5, can code and reason its way through problems with moderate competency.

Similarly, I truly don’t understand why “GPT-4o with scheduled tasks” is a separate model from 4o. That’s like making Deep Research or Search a separate option in the picker. Tasks should be relegated to another button in the ChatGPT app’s message box, sitting alongside Advanced Voice Mode and Whisper, so that task requests are marked as such instead of being sent as normal messages.

Of the major artificial intelligence providers, I’d say Anthropic has the best names, though only by a slim margin. Anyone who knows how poetry works should have a pretty easy time understanding which model is the best, aside from Claude 3 Opus, which isn’t the most powerful model but nevertheless carries the “best” name of the three (an opus refers to a long musical composition). Still, the hate for Claude 3.7 Sonnet and love for 3.5 Sonnet add confusion to the lineup, but that’s a user preference not borne out by benchmarks, which have 3.7 Sonnet clearly in the lead.

Gemini’s models appear to have the most baggage associated with them, but for the first time in Google’s corporate history, I think the company named the ones available through the chatbot somewhat decently. “Flash” appears to be used for the general-use models, which I still think are terrible, and “Pro” refers to the flagship ones. Seriously, Google really did hit it out of the park with 2.5 Pro, beating every other model in most benchmarks. It’s not my preferred one due to its speaking style, but it is smart and great at coding.

OpenAI Is Building a Social Network

Kylie Robison and Alex Heath, reporting for The Verge:

OpenAI is working on its own X-like social network, according to multiple sources familiar with the matter.

While the project is still in early stages, we’re told there’s an internal prototype focused on ChatGPT’s image generation that has a social feed. CEO Sam Altman has been privately asking outsiders for feedback about the project, our sources say. It’s unclear if OpenAI’s plan is to release the social network as a separate app or integrate it into ChatGPT, which became the most downloaded app globally last month. An OpenAI spokesperson didn’t respond in time for publication.

Only one thing comes to mind for why OpenAI would ever do this: training data. It already collects loads of data from queries people type into ChatGPT, but people don’t speak to chatbots the way they do other people. To learn the intricacies of interpersonal conversations, ChatGPT needs to train on a social network. GPT-4, and by extension, GPT-4o, was presumably already trained on Twitter’s corpus, but now that Elon Musk has shut off that pipeline, OpenAI needs to find a new way to train on real human speech. The thing is, I think OpenAI’s X competitor would actually do quite well in the Silicon Valley orbit, especially if OpenAI itself left X entirely and moved all of its product announcements to its own platform. That might not yield quite as much training data as X or Reddit, but it would presumably be enough to warrant the cost. (Altman is a savvy businessman, and I really don’t think he’d waste money on a project he didn’t think was absolutely worth it.)

OpenAI might also position the network as a case study for fully artificial intelligence-powered moderation. If the site turns into 4chan, it really doesn’t benefit OpenAI unless it wants to create an alt-right persona for ChatGPT or something. (I wouldn’t put that past them.) Content moderation, as has been proven numerous times, is the most potent challenge in running a social network, and if OpenAI can prove ChatGPT is an effective content moderator, it could sell that to other sites. Again, Altman is a savvy businessman, and it wouldn’t be surprising to see the network be used as a de facto example of ChatGPT doing humans’ jobs better.

In a way, OpenAI already has a social network: the feed of Sora users. Everyone has their own username, and there’s even a like system to upvote videos. It’s certainly far from an X-like social network, but I think it paints a rough picture of what this project could look like. When OpenAI was founded, its mission was to ensure AI benefits all of humanity. In recent years, Altman’s company seems to have abandoned that core philosophy, which revolved around openly publishing model data and safety information so outside researchers could scrutinize it, and around putting a kill switch in the hands of a nonprofit board. Those plans have evaporated, so OpenAI is trying something new: inviting “artists” and other users of ChatGPT to post their uses for AI out in the open.

The official OpenAI X account is mainly dedicated to product announcements due to the inherent seriousness and news value of the network, but the company’s Instagram account is very different. There, it posts questions to its Instagram Stories asking ChatGPT users how they use certain features, then highlights the best ones. OpenAI’s social network would almost certainly include some ChatGPT tie-in where users could share prompts and ideas for how to use the chatbot. Is that a good idea? No, but it’s what OpenAI has been inching toward for at least the past year. That’s how it frames its mission of benefiting humanity. I don’t see how the company’s social network would diverge from that product strategy Altman has pioneered to benefit himself and place his corporate interests above AI safety.

Stop Me if You’ve Heard This Before: iPadOS 19 to Bring New Multitasking

Mark Gurman, reporting just a tiny nugget of information on Sunday:

I’m told that this year’s upgrade will focus on productivity, multitasking, and app window management — with an eye on the device operating more like a Mac. It’s been a long time coming, with iPad power users pleading with Apple to make the tablet more powerful.

It’s impossible to make much of this sliver of reporting, but here’s a non-exhaustive timeline of “Mac-like” features each iPadOS version has included since its introduction in 2019:

- iPadOS 13: Multiple windows per app, drag and drop, and App Exposé.

- iPadOS 14: Desktop-class sidebars and toolbars.

- iPadOS 15: Extra-large widgets (atop iOS 14’s existing widgets).

- iPadOS 16: Stage Manager and multiple display support.

- iPadOS 17: Increased Stage Manager flexibility.

- iPadOS 18: Nothing of note.

Of these features, I’d say the most Mac-like one was bringing multiple window support to the iPad, i.e., the ability to create two Safari windows, each with its own set of tabs. It was way more important than Stage Manager, which really only let those windows float around and be resized to some extent, a negligible benefit on the iPad because iPadOS interface elements are so large. My MacBook Pro’s screen is only about an inch larger than the largest iPad’s, but windows in Stage Manager feel noticeably more cramped on the iPad because its icons are larger to stay touch-friendly. From a multitasking standpoint, I think the iPad is now as good as it can get without becoming overtly anti-touchscreen. The iPad’s trackpad cursor and touch targets are beyond irritating for anything other than light computing use, and no number of multitasking features will change that.

This is a complete shot in the dark, but I think iPadOS 19 will allow truly freeform window placement independent of Stage Manager, just like the Mac in its native, non-Stage Manager mode. It’ll have a desktop, Dock, and maybe even a menu bar for apps to segment controls and maximize screen space like the Mac. (Again, these are all wild guesses and probably won’t happen, but I’m just spitballing.) That’s as Mac-like as Apple can get within reason, but I’m struggling to understand how that would help. Drag and drop support in iPadOS is robust enough. Context menus, toolbars, keyboard shortcuts, sidebars, and Spotlight on iPadOS feel just like the Mac, too. Stage Manager post-iPadOS 17 is about as good as macOS’ version, which is to say, atrocious. Where does Apple go from here?

No, the problem with the iPad isn’t multitasking. It hasn’t been since iPadOS 17. The issue is that iPadOS is a reskinned, slightly modified version of the frustratingly limited iOS. There are no background items, screen capture utilities, audio recording apps, clipboard managers, terminals, or any other tools that make the Mac a useful computer. Take this simple, first-party example: I have a shortcut on my Mac I invoke using the keyboard shortcut Shift-Command-9, which takes a text selection in Safari, copies the URL and author of the webpage, turns the selection into a Markdown-formatted block quote, and adds it to my clipboard. That automation is simply impossible on iPadOS. Again, that’s using a first-party app. Don’t get me started on live-posting an Apple event using CleanShot X’s multiple display support to take a screenshot of my second monitor and copy it to the clipboard or, even more embarrassingly for the iPad, Alfred, an app I invoke tens of times a day to look up definitions, make quick Google searches, or look at my clipboard history. An app like Alfred could never exist on the iPad, yet it’s integral to my life.
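The text transformation at the heart of that Shift-Command-9 shortcut is simple; the hard part on the iPad is triggering it system-wide. Here’s a minimal sketch of just the formatting step, assuming an attribution line of the form `[Author](URL):` followed by the quoted selection. That layout and the `to_markdown_quote` name are hypothetical, not necessarily what the actual shortcut produces, and the sketch neither reads the Safari selection nor touches the clipboard.

```python
def to_markdown_quote(selection: str, url: str, author: str) -> str:
    """Format a text selection as a Markdown block quote with a linked attribution.

    The "[Author](URL):" attribution format is an assumption for illustration.
    """
    attribution = f"[{author}]({url}):"
    # Prefix every line with "> "; blank lines become a bare ">" so the
    # paragraphs stay inside one contiguous block quote.
    quoted = "\n".join(
        f"> {line}" if line.strip() else ">"
        for line in selection.strip().splitlines()
    )
    return f"{attribution}\n\n{quoted}"


print(to_markdown_quote("Line one.\n\nLine two.", "https://example.com/post", "Jane Doe"))
```

Turning blank lines into a bare `>` keeps a multi-paragraph selection in one block quote rather than splitting it in two.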

Grammarly can’t run in the background on iPadOS. I can’t open ChatGPT using Option-Space, which has become ingrained in my muscle memory over the year it’s been available on the Mac. System-wide optical character recognition using TextSniper is impossible. The list goes on and on: the iPad is limited by the apps it can run, not how it displays them. I spend hours a day with a note-taking app on one side of my Mac screen and Safari on the other, and I can do that on the iPad just fine. But when I want to look up a definition on the Mac, I can just hit Command-Space and define it. When I need to get text out of a stubborn image on the web, there’s an app for that. When I need to run Python or Java, I can do that with a simple terminal command. The Mac is a real computer; the iPad is not, and some dumb multitasking features won’t change that.

I’ve set up hundreds of things on my Mac that let me do my work faster and more easily than on the iPad, so when I pick up my iPad (with a processor more powerful than some Macs the latest version of macOS supports), I feel lost. The iPad feels like a larger version of the iPhone, but one that I can’t reach all the corners of with just one hand. It lives in this liminal space between the iPhone and the Mac, where it performs the duties of both devices so poorly. It’s not handheld or portable at all to me, yet it is absolutely not capable enough for me to do my work. The cursor feels odd because the interface wasn’t designed to be used with one. The apps I need aren’t there and never will be. It’s not a comfortable place to work; it’s like a desk that looks just like the one at home but where everything is slightly misplaced and out of proportion. It drives me nuts to use the iPad for anything more than scrolling through an article in bed.

No amount of multitasking features can fix the iPad. It’ll never be able to live up to its processor or the “Pro” name. And the more I’ve been thinking about it, the more I’m fine with that. The iPad isn’t a very good computer. I don’t have much to do with it, and it doesn’t add joy to my life. That’s fine. People who want an Apple computer and need one to do their job should go buy a Mac, which is, for all intents and purposes, cheaper than an iPad Pro with a Magic Keyboard. People who don’t want a Mac or already have their desktop computing needs met should buy an iPad. As for the iPad Pro with Magic Keyboard, it sits in a weird, awful place in Apple’s product lineup where the only thing it has going for it is the display, which, frankly, is gorgeous. It is no more capable than a base-model iPad, but it certainly is prettier.

It’s time to stop wishing the iPad would do something it just isn’t destined to do. The iPad is not a computer and never will be.

Apple and the Tariffs

Apple transported five planes full of iPhones and other products from India to the US in just three days during the final week of March, a senior Indian official confirmed to The Times of India. The urgent shipments were made to avoid a new 10% reciprocal tariff imposed by US President Donald Trump’s administration that took effect on April 5. Sources said that Apple currently has no plans to increase retail prices in India or other markets despite the tariffs.

To mitigate the impact, the company rapidly moved inventory from manufacturing centres in India and China to the US, even though this period is typically a slow shipping season.

“Factories in India and China and other key locations had been shipping products to the US in anticipation of the higher tariffs,” according to one source.

The stock market returned to normalcy on Wednesday afternoon after Trump paused the tariffs for 90 days, but even though Apple’s stock is up 15 percent, the company is far from out of the woods. Trump only paused his latest round of reciprocal tariffs; the tariffs on China aren’t covered by the pause. Chinese imports are tariffed at 125 percent as of Wednesday morning. India, by comparison, is tariffed at a measly 10 percent, which is much more palatable for Apple, a company that probably couldn’t afford to lose so much on iPhone imports into the United States, a market that accounts for nearly half of its revenue. So the plan makes sense, and Tim Cook, Apple’s chief executive, is once again flexing his supply chain prowess built up during his time as Apple’s chief operating officer. While smaller companies have been flat-out calling off imports into the United States, Apple just did a clever reroute. Nice.
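To put the two rates cited above side by side, here is the arithmetic for a hypothetical shipment with customs value V, assuming the tariff is levied on that value alone:

```latex
\begin{aligned}
\text{China: } & V + 1.25V = 2.25V \\
\text{India: } & V + 0.10V = 1.10V \\
\frac{\text{duty}_{\text{China}}}{\text{duty}_{\text{India}}} & = \frac{1.25V}{0.10V} = 12.5
\end{aligned}
```

In other words, under these rates the same shipment routed through India carries one-twelfth and a half of the duty it would from China.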