Mark Zuckerberg’s Week of Being an Insecure Opportunist

Meta’s virtue signaler-in-chief has lots to say

Mark Zuckerberg, Meta’s founder, posted a long thread on Meta’s Twitter copycat, Threads, about updates to Meta’s content moderation policy, beginning a busy week for Meta employees and users alike. Here are my thoughts on what he said.

It’s time to get back to our roots around free expression and giving people voice on our platforms.

Great heavens.

1/ Replace fact-checkers with Community Notes, starting in the US.

As many others have said, I have never seen Meta fact-check posts that truly deserved fact-checking. It put a label on my thread saying Trump would win the election after the failed assassination attempt in Butler, Pennsylvania, but I’ve never seen a fact check implemented where it mattered. Community Notes, on the other hand, is phenomenal — albeit a stolen idea from Twitter’s Birdwatch, now X’s Community Notes. But the Zuckerberg of four years ago wouldn’t have decided to scrap fact-checking entirely — his instinct would’ve instead been to double-down and improve Meta’s machine learning to tag bad posts automatically. Meta is a technology company, and Zuckerberg has historically solved even its biggest social issues with more technology. To go all natural selection, “every man for himself” mode rings alarm bells.

Meta’s platforms suffer from severe misinformation, though probably not worse than the cesspool that is X. Facebook is inundated with some of the worst racism, sexism, misogyny, and hateful speech that consistently uses fake, fabricated information as “evidence” for its claims. President Biden’s administration admonished Meta — then Facebook — in 2021 for spreading vaccine misinformation; the president said the company was “killing people.” Twitter proactively removed most vaccine misinformation in 2021, but Meta sat on its hands until the Biden administration rang them up and asked them to take it down as it interfered with a crucial component of the government’s pandemic response. (More on this later.)

2/ Simplify our content policies and remove restrictions on topics like immigration and gender that are out of touch with mainstream discourse.

It’s hard to tell what Zuckerberg means from just this post alone, but Casey Newton at Platformer describes the changes well:

For example, the new policy now allows “allegations of mental illness or abnormality when based on gender or sexual orientation, given political and religious discourse about transgenderism and homosexuality and common non-serious usage of words like ‘weird.’”

So in addition to being able to call gay people insane on Facebook, you can now also say that gay people don’t belong in the military, or that trans people shouldn’t be able to use the bathroom of their choice, or blame COVID-19 on Chinese people, according to this round-up in Wired. (You can also now call women household objects and property, per CNN.) The company also (why not?) removed a sentence from its policy explaining that hateful speech can “promote offline violence.”

So, “out of touch with mainstream discourse” directly translates to being allowed to say “women are household objects.” Here’s an experiment for Zuckerberg, who has a wife and three daughters: Go to the middle of Fifth Avenue and shout, “Women are household slaves!” He’ll be punched to death, and that’ll be the end of his tenure as the world’s second-most annoying billionaire. But on Facebook, such speech is sanctioned by the platform owner — you might even be promoted for it because Zuckerberg seems keen on bringing more “masculine energy” to his company. That’s not “mainstream discourse”; it’s flat-out misogyny.

This is where it became apparent to me that Zuckerberg’s new speech policy — which, according to The New York Times, he whipped up in weeks without consulting his staff after a retreat to Mar-a-Lago, President-elect Donald Trump’s home — is meant to be awful. It was engineered to be racist, sexist, and homophobic. It wasn’t created in the interest of free speech; it’s a capitulation to Trump and his supporters. The relationship between the president-elect and Zuckerberg has been tenuous, to put it lightly, but the new content policy is designed to repair it.

Trump has threatened Zuckerberg with jail time on numerous occasions for donating millions of dollars to a non-profit voting initiative in 2020 to help people cast ballots during the pandemic. (Republicans have called the program “Zuckerbucks” and have ripped into it on every possible occasion.) Facebook deplatformed him after his coup attempt on January 6, 2021, after he spread misinformation about the election results that year, and that enraged Trump, who vowed to go after “Big Tech” companies in his second term. Trump now has the power to ruin Meta’s business, and Zuckerberg wants to be on his good side after noticing how Elon Musk did the same after his acquisition of Twitter. The “Make America Great Again” crowd values transphobia and homophobia like no other virtue, so the best way to virtue signal to the incoming administration is to stand behind the systemic hatred of vulnerable people.

I wouldn’t consider Zuckerberg a right-winger; I just think he’s a nasty, good-for-nothing grifter. He’s an opportunist at heart, as perfectly illustrated by Tim Sweeney, Epic Games’ chief executive, in perhaps the best the-worst-person-you-know-made-a-great-point post I’ve ever encountered:

After years of pretending to be Democrats, Big Tech leaders are now pretending to be Republicans, in hopes of currying favor with the new administration. Beware of the scummy monopoly campaign to vilify competition law as they rip off consumers and crush competitors.

The second Washington flips to Democrats, Zuckerberg will be back on the “Zuckerbucks” train once again, standing up for democracy and human rights in name only. In truth, he only has one initiative: to make the most money possible. The Biden administration has made accomplishing that goal very difficult for poor Zuckerberg, and it hasn’t stood up for American companies after the European Union’s lawfare against Big Tech, so the latest changes to Meta’s content moderation are meant to curry favor with violent criminals in the Trump administration — including Trump, himself, a violent criminal. So, the changes aren’t about adapting to social acceptability; rather, they conform to MAGA’s most consistent viewpoint: that all gay people are subhuman and women are objects.

3/ Change how we enforce our policies to remove the vast majority of censorship mistakes by focusing our filters on tackling illegal and high-severity violations and requiring higher confidence for our filters to take action.

Word salad, noun: “a confused or unintelligible mixture of seemingly random words and phrases.”

4/ Bring back civic content. We’re getting feedback that people want to see this content again, so we’ll phase it back into Facebook, Instagram and Threads while working to keep the communities friendly and positive.

During campaigning season, Adam Mosseri, Instagram’s chief executive and head of Threads, said politics would explicitly never be promoted again on Meta’s platforms because it was inherently divisive. Threads was founded with the goal of de-emphasizing so-called “hard news” in text-based social media, much to the chagrin of its users who, for years at this point, have been begging Meta to flip the switch and stop down-ranking links and news. But now that the election is over and the new administration will begin to highlight its propaganda, Zuckerberg has had a change of heart.

Again, Zuckerberg is an opportunist: If he can position Facebook (and Threads, but to a lesser extent) as another MAGA-friendly news outlet, alongside the likes of Truth Social and X, chances are the new administration will start to give Meta free passes along the way. During Trump’s first term, Twitter was the place to know about what was happening in Washington. Trump’s team never gave information to the “mainstream media,” as it’s known in alt-right circles, instead opting for the Twitter firehose of relatively little editorialization. If Trump tweeted something, Trump tweeted it, and that was it; case closed. Zuckerberg wants to capitalize on Trump’s affinity for text-based social media, and the re-introduction of politics (i.e., “civic content”) aims to appeal to this affinity. If he’s good enough, Trump might throw Zuckerberg a bone, choosing to give Meta some of his precious content.

5/ Move our trust and safety and content moderation teams out of California, and our US content review to Texas. This will help remove the concern that biased employees are overly censoring content.

Meta has had fact-checkers in Texas for years, but Texas is as Republican as California is Democratic, so I don’t think the “concern” makes even a modicum of sense. Again, this is a capitulation to Trump’s camp, which perceives “woke California liberals” as out of touch with America and biased. In reality, there’s no proof that they’re any more biased than Republicans from Texas. Additionally, unless Meta is outsourcing content moderation to cattle fields in West Texas, cities in the state are as liberal — or even more liberal, as pointed out by John Gruber at Daring Fireball — as California, so this entire plan is moot. For all we know, it probably doesn’t exist at all.

I say that because reporting from Wired on Thursday cites sources in the company who say “the number of employees that will have to relocate is limited.” The report also says that Meta has content moderators outside of Texas and California, too, in places like Washington and New York, making it clear as day that it’s just more bluff from Zuckerberg to appease the hard-core anti-California MAGA crowd.

6/ Work with President Trump to push back against foreign governments going after American companies to censor more. The US has the strongest constitutional protections for free expression in the world and the best way to defend against the trend of government overreach on censorship is with the support of the US government.

He’s not the president yet, but the last part of the final sentence makes Zuckerberg’s intentions throughout the whole thread strikingly obvious: “with the support of the U.S. government.” This entire thread is a love letter to the president-elect, who, in four days, has the power to bankrupt Meta in a matter of weeks. He controls the Federal Communications Commission, the Federal Bureau of Investigation, the Federal Trade Commission, and the Justice Department — he could just take Meta off the internet and call it a day. He could throw Zuckerberg in prison. There aren’t any checks and balances in Trump’s second term, so to do business in Trump’s America, Zuckerberg needs his blessing.

After his word salad thread on Threads, Zuckerberg did what any smooth-brained MAGA grifter would do: join Joe Rogan, the popular podcaster, on his show to discuss the changes. Adorned with a gold necklace and a terrible curly haircut, Zuckerberg bashed diversity, equity, and inclusion programs — which Meta would go on to gut entirely — defended his policy that allows Meta users to call women household objects and bully gay people and gay people only, and lamented that his company had too much “feminine energy.” And he bashed Biden administration officials for “cursing” at Meta employees to remove vaccine misinformation, but that’s the usual for Zuckerberg these days. The Rogan interview — much like Joel Kaplan, Meta’s new policy chief, going on Fox and Friends to advertise the new policy — was a premeditated move to promote the idea that hateful speech is now sanctioned on Meta platforms to the people who would be the most intrigued: misogynistic, manosphere-frequenting Generation Z and Millennial men.

The Rogan interview — which I, a Generation Z man, chose not to watch for my own sanity — is a fascinating look at Zuckerberg’s inner psyche. Here is Elizabeth Lopatto, writing for The Verge:

On the Rogan show, Zuckerberg went further in describing the fact-checking program he’d implemented: “It’s something out of like 1984.” He says the fact-checkers were “too biased,” though he doesn’t say exactly how…

Well, Zuckerberg’s out of the business of reality now. I am sympathetic to the difficulties social media platforms faced in trying to moderate during covid — where rapidly-changing information about the pandemic was difficult to keep up with and conspiracy theories ran amok. I’m just not convinced it happened the way Zuckerberg describes. Zuckerberg whines about being pushed by the Biden administration to fact-check claims: “These people from the Biden administration would call up our team, and, like, scream at them, and curse,” Zuckerberg says.

“Did you record any of these phone calls?” Rogan asks.

“I don’t know,” Zuckerberg says. “I don’t think we were.”

But the biggest lie of all is a lie of omission: Zuckerberg doesn’t mention the relentless pressure conservatives have placed on the company for years — which has now clearly paid off. Zuckerberg is particularly full of shit here because Republican Rep. Jim Jordan released Zuckerberg’s internal communications which document this!

In his letter to Jordan’s committee, Zuckerberg writes, “Ultimately it was our decision whether or not to take content down.” “Like I said to our teams at the time, I feel strongly that we should not compromise our content standards due to pressure from any Administration in either direction – and we’re ready to push back if something like this happens again.”

“Ultimately it was our decision whether or not to take content down.” So, by Zuckerberg’s own admission, it was never the Biden administration that forced Meta to remove content; Meta did so of Zuckerberg’s own volition after prompting from the administration. This was backed up by the Supreme Court in Murthy v. Missouri, where the justices, last June, said that the government simply requested offending content to be removed. Murthy v. Missouri was tried in front of the Supreme Court by qualified legal professionals, and Zuckerberg, for a sizable portion of the Rogan interview, lied through his teeth about its decision. This has already been decided by the courts! It is not a point of contention that the Biden administration did not force Meta to remove content; doing so would be a violation of Meta’s First Amendment rights.

Back to Zuckerberg’s psyche: This sly admission, like many others in the interview, is a peek into Zuckerberg’s blether. His nonsense thread is a love letter to the Trump administration written just the way Trump would: with no factual merit, long-winded rants about free speech and over-moderation, and no substantive remedies. I always like to say that if someone tells blatantly obvious lies, it’s safe to assume even the less conspicuous claims are also fibs. That, much like it does to Trump, applies perfectly to Zuckerberg — a crude, narcissistic businessman.

As I wrote earlier, Zuckerberg got his great idea after observing how Musk, the owner of X, got into Trump’s inner circle. Musk and Trump are notoriously not friends; Trump a few years ago posted about how he could have gotten Musk to “drop to your knees and beg.” Nevertheless, Musk is one of Trump’s key lieutenants in the transition, giving Zuckerberg hope that he, too, can get out of the “we’ll-throw-him-in-prison” zone. Tim Cook — Apple’s chief executive who donated $1 million to Trump’s inaugural committee and is set to attend the event January 20 — got his way with Trump in a similar fashion, posing with the then-president at a factory in Austin, Texas, where Mac Pro units were being assembled in 2019. (Those old enough to remember “Tim Apple” will recall the business-oriented bromance between Trump and Cook.) Cook is doing it again this year, making it harder for Zuckerberg to fit in amongst his biggest competition. His solution: Get Trump to hate Apple. Here is Chance Miller, reporting for 9to5Mac:

Zuckerberg has long been an outspoken critic of App Store policies and Apple’s privacy protections. In this interview with Rogan, the Meta CEO claimed that the 15-30% fees Apple charges for the App Store are a way for the company to mask slowing iPhone sales. According to Zuckerberg, Apple hasn’t “really invented anything great in a while” and is just “sitting” on the iPhone.

Zuckerberg also took issue with AirPods and the fact that Apple wouldn’t give Meta the same access to the iPhone for its Meta Ray-Ban glasses.

Zuckerberg, however, said he’s “optimistic” that Apple will “get beat by someone” sooner rather than later because “they’ve been off their game in terms of not releasing innovative things.”

Miller’s piece includes a litany of great quotes from the interview, including Zuckerberg’s seemingly never-ending aspersions about Apple Vision Pro and iMessage’s blue bubbles. In response to the article, Zuckerberg posted this gold-mine foaming-at-the-mouth reply on Threads:

The real issue is how they block developers from accessing iPhone functionality they give their own sub-par products. It would be great for people if Ray-Ban Meta glasses could connect to your phone as easily as airpods, but they won’t allow that and it makes the experience worse for everyone. They’ve blocked so many things like this over the years. Eventually it will catch up to them.

Wrong, wrong, wrong. Again, never put it past a liar to lie incessantly at every opportunity. As I wrote in my article about Meta’s interoperability requests under the European Union’s Digital Markets Act, Apple already has a developer tool for this called AccessorySetupKit, with the only catch being that the tool doesn’t allow developers to snoop on users’ connected Bluetooth devices and Wi-Fi networks, which wouldn’t be so great for Meta’s bottom line. So, for offering a tool that doesn’t allow Meta to abuse its monopoly over smart glasses and social networks to harm consumers, Apple gets hit with the “sub-par products” line. As an example, Apple’s biggest software competitor is Google, which makes Android, and Google never calls Apple products sub-par. As a businessman, calling a competitor’s product “sub-par” is just a sign of weakness.

But this weakness isn’t coincidental. Apple is facing one of the biggest antitrust lawsuits in its history, and Trump — along with Pam Bondi, his nominee for attorney general — has the power to halt it instantly the moment he takes office. If Zuckerberg can get on Trump’s good side and paint Apple as a greedy, anti-American corporation in the next few days before the transition, he hopes it can outweigh Cook’s influence on the house of cards just long enough for the case to go to trial.

And besides, Meta hasn’t invented anything other than Facebook itself two decades ago. Its largest platforms (Instagram, WhatsApp, and Meta Quest) were all acquisitions; its new text-based social media app, Threads, is a blatant one-for-one copy of Twitter’s 16-year-old idea; its large language model trails behind ChatGPT; its content moderation ideas are stolen straight from X’s playbook; and its chat apps use Signal’s encryption protocol. Meta is not an innovator and never has been one; every accusation is a confession. But, again, none of this logic is at the heart of Zuckerberg’s case or is really even relevant to analyzing the brazen changes coming to Meta’s platforms.

The Rogan interview — along with the major policy changes on Meta platforms announced just about a week before Trump’s inauguration — was a strategic, calculated public relations maneuver from Zuckerberg and his tight-knit team of close advisers. He and his company have a lot to gain — and lose — from a second Trump administration, and so does his competition. But Zuckerberg, along with the wide range of tech leaders from Shou Chew of TikTok to Jensen Huang of Nvidia, understands that the best way to remain at the top for just long enough is to take down the competition and play a little game of “The Apprentice.”

In the end, all of this will be over in about a year, tops. In the Trump orbit, nothing ever lasts for too long. It really is a delicate house of cards, formed with bonds of bigotry and corporate greed. While Zuckerberg may be on Trump’s good side leading up to the inauguration, he might be bested by Musk’s X or Chew’s TikTok, both of whom are in desperation mode. Only one can win: If TikTok does, Zuckerberg is out of the tournament; if Zuckerberg wins, Musk makes the embarrassing walk back to the failure that plagued the first X.com. And if Zuckerberg wins, this country is in for a hell of a ride. Make America sane again.

Solar, Monitors, and Chatbots: The Best of the CES Show Floor

The interestingness is hiding between the booths

The show floor of CES 2025. Image: Media Play News.


On Tuesday, doors to the show floor opened at the Consumer Electronics Show in Las Vegas, letting journalists and technology vendors alike explore the innovations of companies small and large. Over Tuesday and Wednesday, I tried to find as many hidden gems as I could, and I have thoughts about them all — everything from solar umbrellas to fancy monitors to new prototype electric vehicles. While Monday, as I wrote earlier, was filled with boring monotony, I enjoyed learning about the small gadgets scattered throughout the massive Las Vegas Convention Center. While many of them may never go on sale, that is mostly the point of CES — spontaneity, concepts, and intrigue.

Here are some of my favorite gadgets from the show floor over my last two days covering the conference.

Razer’s Project Arielle Gaming Chair

Image: Razer.


Razer on Tuesday showcased its latest gaming-focused prototype: a temperature-controlled chair. Razer is known for wacky, interesting concepts, such as the modular desk it unveiled a few conferences ago, but its latest is a product I didn’t know I needed in my life. Project Arielle is a standard-issue mesh gaming chair (specifically, Razer’s Fujin Pro) equipped with a heating and cooling fan system placed at the rear, near the spine. The fan pumps either hot or cool air through tubes that travel through the seat cushion and terminate at holes in the cushion, controlling the seat’s temperature.

The concept has multiple fan speeds and, in typical Razer fashion, is adorned with colorful LED lights. The prototype functions similarly to perforated car seats found in luxury vehicles, such as early Tesla Model S and X models, but connects to a wall outlet for power; it does not have a battery, meaning that if the cable is disconnected, the temperature control will no longer function.

I think the idea is quite humorous, but it does have some real-life applications in very warm or cold climates. It’s less a gaming product than a luxurious, over-engineered seating apparatus. Because of how over-engineered the product is and how difficult it ought to be to manufacture reliably, chances are it will never see the light of day or become available for purchase. But concepts like these make CES exciting and interesting to cover.

GeForce Now Support Coming to Apple Vision Pro

Image: Nvidia.


Nvidia, after its jam-packed keynote on Monday night, announced in a press release that its GeForce Now game streaming platform would begin supporting Apple Vision Pro through Safari. The company said the website would begin working when an update comes “later this month,” but it is unclear how it will function since GeForce Now runs in a progressive web app, which Apple doesn’t support on visionOS. I assume the Apple Vision Pro-specific version of the website omits the PWA step, which would require some form of collaboration with Apple to ensure everything works alright.

As I have written many times before, Nvidia and Apple have a strained relationship after the 2008 MacBook Pro’s failed graphics processors. But it seems like the two companies are getting along better now since Nvidia now heavily features Apple Vision Pro in its keynotes and works with Apple on enterprise features for visionOS. I’m glad to see this progression and hope it continues, as much of the groundbreaking technology best experienced on an Apple Vision Pro is created using Nvidia processors. Still, though, it’s a shame there isn’t a visionOS-native GeForce Now app that would alleviate the pain of web apps. Apple’s new App Store rules permit game streaming services to do business on the App Store, so it isn’t a bureaucratic issue on Apple’s side that prevents a native app.

Technics’ Magnetic Fluid Drivers

Image: Panasonic.


Technics, Panasonic’s audio brand, announced on Tuesday a new version of its wireless earbuds with an interesting twist: drivers with an oil-like fluid between the driver itself and the voice coil to improve bass and limit distortion. According to the company, the fluid has magnetic particles that create an “ultra-low binaural frequency,” producing bass without distortion.

This is the kind of nerdery that catches my eye at CES: Most earbuds with small drivers typically have to prioritize volume over fidelity to compensate for the minuscule apparatus that makes the noise. As volume increases, the driver reaches its capacity — the maximum or minimum frequency it can produce — quicker. The magnetic fluid drivers aim to broaden this threshold to 3 hertz from the typical 20 hertz at its lowest, therefore producing better bass with low distortion at even high volume levels.

It’s only a matter of time before reviewers evaluate Technics’ claims: the earbuds go on sale this week for $300, $50 more than Apple’s AirPods Pro, the gold standard for truly wireless earbuds. They support Google’s Fast Pair protocol for auto-switching and easy pairing, à la AirPods, have voice boost features like Voice Focus AI to improve call quality, and customize active noise-cancellation for each ear. But these features are standard for flagship earbuds; it’s the driver fluid that makes them compelling.

Movano’s Health-Focused AI Chatbot

Image: Movano.


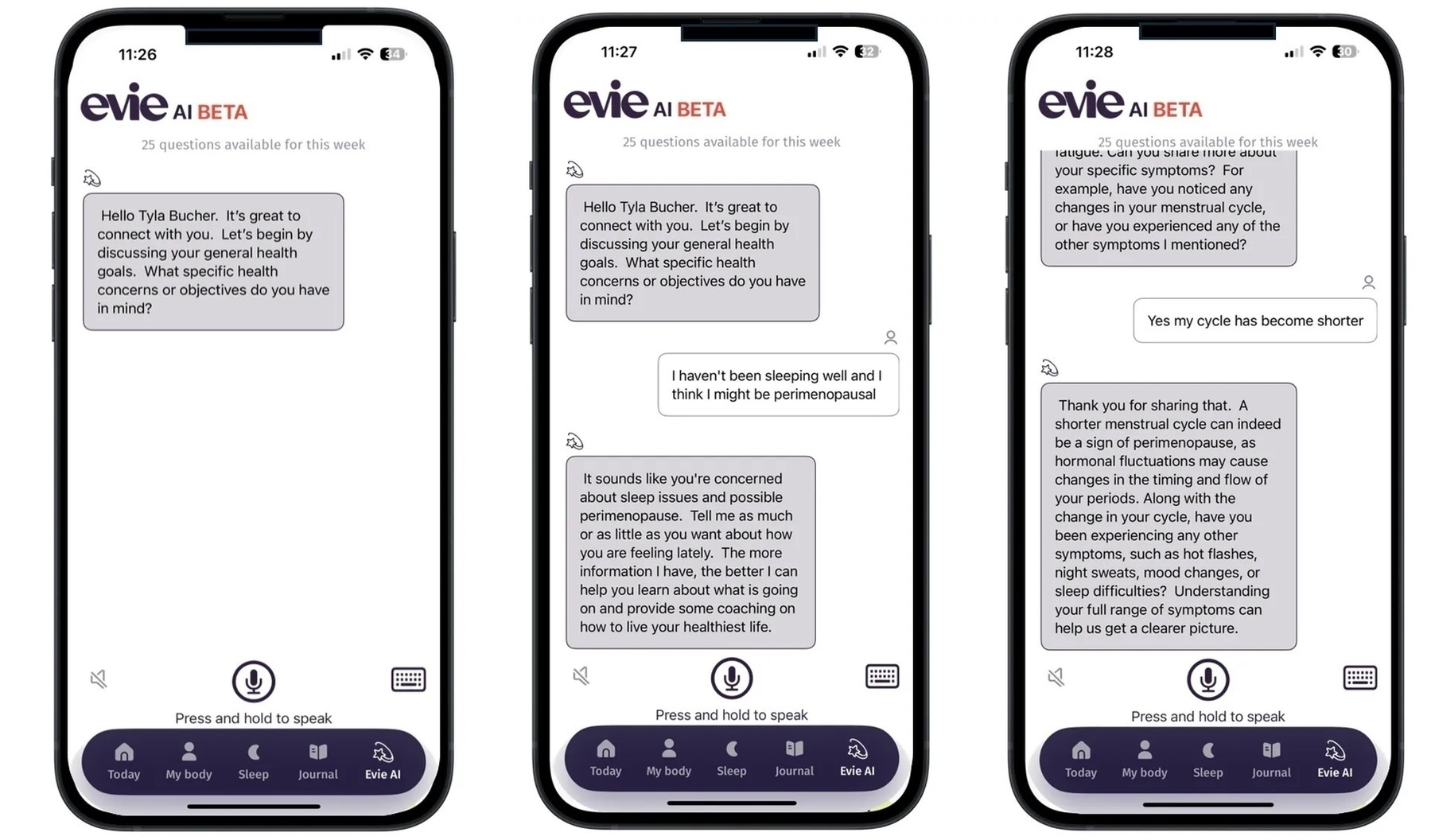

Movano, the little-known smart ring maker, announced on Tuesday a new artificial intelligence chatbot trained specifically on medical journals to provide correct, appropriate answers to medical questions. Movano claims the chatbot, EvieAI, is trained only on 100,000 peer-reviewed journals written by medical professionals and cross-checks information with accredited medical institutions like Mayo Clinic before producing a response. The company says the chatbot answers medical queries with an astonishing 99 percent accuracy, but it did not give a demonstration to members of the press.

My first instinct upon reading Movano’s press release was that WebMD, the easy-to-understand medical answers website, has finally met its first real AI competition. I still believe that to be the case, but chances are many people are more likely to trust a website with a byline over an AI-generated answer. And all it takes is one flub for EvieAI to be entirely wiped off the market and for Movano to never be trusted with AI again because the stakes are so high in medicine. I can see the tool being helpful for summaries and those “Click for Help!” chat pop-ups on some medical websites, but I still don’t think it should be trusted.

I do think AI chatbots will eventually advance to the point of reliability, but the lack of trustworthiness isn’t due to a shortage of reliable information on the internet — it’s because chatbots don’t know what they’re saying. This is an inherent limitation of large language models, and the only way to solve it is by building a helper bot that fact-checks the main language model. Even ChatGPT isn’t that sophisticated yet, so I doubt EvieAI is. Fine-tuning the scope of available training data does give the chatbot less information to make mistakes with, but ultimately, all the model knows how to do is break down words into tokens, do some pattern matching, and convert the tokens back to prose again. Narrowing the total amount of tokens reduces the likelihood for bad tokens to be generated, but it’s still a black box.
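To make the "tokens and pattern matching" point concrete, here is a deliberately crude sketch. It is not how EvieAI or ChatGPT works: real models use subword tokens and neural networks, while this toy just counts which word follows which in a tiny made-up corpus. The corpus, function names, and sentences are all hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Split text into lowercase word tokens (real models use subword tokens)."""
    return text.lower().split()


def train_bigrams(corpus):
    """Count, for every token, which tokens follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, start, max_tokens=6):
    """Repeatedly append the most frequent follower of the last token."""
    tokens = [start]
    while len(tokens) < max_tokens and follows[tokens[-1]]:
        tokens.append(follows[tokens[-1]].most_common(1)[0][0])
    return " ".join(tokens)


# Hypothetical training text, invented for this example.
corpus = [
    "aspirin treats headaches",
    "aspirin treats fever",
    "aspirin treats headaches quickly",
]
model = train_bigrams(corpus)
print(generate(model, "aspirin"))
```

The toy model answers with whatever continuation appeared most often in its training text. It has no notion of whether the sentence is medically true, which is the limitation described above, just at a vastly smaller scale.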

Honda Zero

Image: Honda.


Honda on Tuesday announced two more concept vehicles to join its Honda Zero lineup of fully electric autonomous cars, first unveiled last year at CES. The two models follow in the footsteps of last year’s concepts, except Honda is more bullish on selling them, with the company stating it will begin production of the two vehicles “by 2026.” (It did not offer a concrete release timeline.)

Honda’s two new models, the Honda 0 SUV and Honda 0 Saloon, feature an unusual, Cybertruck-esque design with boxy edges, flush door handles, and no side mirrors. The Honda 0 Saloon is almost reminiscent of a Lamborghini Aventador, with a sloping hood, but appears like it’s straight from the future. Neither vehicle looks street legal, and no other specifications were provided about them or their predecessors from last year.

Honda, however, did provide some details about the cars’ operating system, which it calls Asimo OS, named after the company’s 2000s-era humanoid robot. Honda was vague about details but said Asimo will allow for personalization, Level 3 automated driving, and an AI assistant that learns from each driver’s driving habits. Honda plans to achieve Level 3 autonomy — which allows a driver to take their hands and feet off the wheel and pedals — by partnering with Helm AI as well as investing more in its own AI development to teach the system how to drive in a large variety of conditions. The company said the Level 3 driving would come to all Honda Zero models at an “affordable cost.”

I never trust this vague vaporware at CES because more often than not, it never ships. Neither of the vehicles — not the ones announced a year ago, nor the ones from this year — looks ready for a drive, and Honda gave no details on what it would do next to develop the line further. As I wrote on Monday, CES is an elaborate creative writing exercise for the world’s tech marketing executives, and Honda Zero is a shining example of that ethos.

BMW’s New AR-Focused iDrive

Image: BMW.


BMW, known for its luxury “ultimate driving machines,” announced an all-new version of its iDrive infotainment system centered around an augmented reality-powered heads-up display. Eliminating the typical instrument cluster, the company opted to project important driving information on the windshield itself, communicating directions and controls via an AR projection on the road. The typical infotainment screens still remain below the windshield, accessible for all passengers, but driver-specific information is now overlaid atop the road to limit distractions.

The new system is scheduled to appear in a sport utility vehicle later this year built on BMW’s Neue Classe architecture, which the company first announced at CES 2023. But the choice to digitize previously analog controls in a vehicle beloved by many for being tactile and sporty is certainly a bold design move — and I’m not sure I like it. The dashboard now looks too empty for my liking, missing the buttons and dials expected on a high-end vehicle. Truthfully, it looks like a Tesla, built with less luxurious materials and with no design taste. As Luke Miani, an Apple YouTuber, put it on the social media website X, “Screens kill luxury.”

I also think that while the AR directions are handy, the overall experience is more irritating and distracting than typical gauges. The speedometer should always be slightly below the windshield so that it is viewable in the periphery without occupying too much space in a driver’s field of view. The new system looks claustrophobic, almost like it has too much going on in too little space. I’ll be interested to see how it looks in a real vehicle later in the year, but for now, count me out.

Delta’s New Inflight Entertainment Screens

Image: Delta Air Lines.

Delta Air Lines, at a flashy 100th-anniversary keynote at the Las Vegas Sphere Tuesday evening, announced updates to its seat-back entertainment and personalization. The company said that it would begin retrofitting existing planes with new 4K high-dynamic-range displays and a new operating system, bringing a “cloud-based in-flight entertainment system” to fliers.

Delta also announced a partnership with YouTube, bringing ad-free viewing to all SkyMiles members aboard. The company announced no other details, but it’s expected that the inflight system will include the YouTube app in retrofitted planes. The new system also supports Bluetooth, has an “advanced recommendation engine,” and allows users to enable Do Not Disturb to notify flight attendants not to disturb them.

Delta said the new planes would begin arriving later this year but had no word on updates to Wi-Fi, including Starlink, which its competitor United Airlines announced late last year would be coming to its entire fleet in a few years. I still believe Starlink internet is more important than any updates to seat-back entertainment screens, as most people usually opt for viewing their own content on personal devices.

Anker’s Solar Umbrella

Image: Anker.

Anker announced and showcased on the show floor this week a beach umbrella made of solar panels. The umbrella, called the Solix Solar Beach Umbrella, uses a new type of perovskite solar cell that is up to twice as efficient as the standard silicon-based cells found in most modern solar panels, according to Anker. Perovskite cells can be optimized to absorb more blue light, which explains how Anker is achieving unprecedented efficiency.

The Solix Solar Beach Umbrella connects to the company’s EverFrost 2 Electric Cooler, which also comes equipped with outlets to charge other devices using the solar power generated by the umbrella. The umbrella charges the cooler’s two 288-watt-hour batteries at 100 watts, which can then power devices at up to 60 watts through the USB-C ports. Anker plans to ship the cooler in February and the umbrella in the summer, with the former starting at $700 and the latter’s price yet to be determined.
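As a back-of-the-envelope check on those figures, the sketch below estimates how long the umbrella would need to fill both batteries from empty. It assumes the 100-watt rate is sustained the whole time and ignores conversion losses, neither of which Anker has confirmed; the function name is my own.

```python
def hours_to_charge(battery_wh: float, battery_count: int, charge_watts: float) -> float:
    """Ideal charge time in hours: total capacity divided by input power."""
    if charge_watts <= 0:
        raise ValueError("charge_watts must be positive")
    return battery_wh * battery_count / charge_watts


# Figures quoted above: two 288 Wh batteries, charged at 100 W.
# Assumption: constant 100 W input and no conversion losses.
full_charge_hours = hours_to_charge(288, 2, 100)
print(f"Full charge from empty: about {full_charge_hours:.2f} hours")
```

Under those ideal conditions, a full charge takes a little under six hours, so real-world sun and losses would likely stretch that across most of a beach day.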

I’ve never seen a perovskite solar panel before, so the umbrella caught my eye for its efficiency. Typically, solar-powered outdoor gear isn’t worthwhile because it doesn’t generate as much power as connected devices use — it’s more suited for long-term solutions like a home during the day when nobody is using power and the batteries can charge. But the perovskite cells change the equation and make Anker’s product much more compelling for long beach days or even camping trips since the umbrella can be used as practically a miniature solar farm to power the company’s batteries, even in low-light conditions.

LG UltraFine 6K

Image: LG.

LG announced over the weekend and showcased on the show floor a 6K-resolution, 32-inch monitor to compete with Apple’s Pro Display XDR. The product ought to have tight integration with macOS, similar to LG’s other UltraFine displays, which are even sold at Apple Stores alongside the Studio Display. Due to its resolution, the monitor has a perfect Retina pixel density, just like Apple’s first-party options, making it an appealing display for Mac designers and programmers.
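To put a rough number on that density claim, here is a small sketch of the arithmetic. LG has not published the exact resolution, so the 6016-by-3384 figure below is an assumption borrowed from the Pro Display XDR, and the 32-inch diagonal is taken at face value.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from the pixel dimensions and the diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


# Assumed resolution: 6016 x 3384, the Pro Display XDR's, not an LG spec.
ppi = pixels_per_inch(6016, 3384, 32)
print(f"Estimated density: {ppi:.1f} ppi")
```

If LG’s panel lands near that resolution, the result sits close to the roughly 218 pixels per inch Apple quotes for its own desktop displays, which is what lets macOS render at a clean 2x scale.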

The display is an LCD, however, and is the first to use Thunderbolt 5, which Apple’s latest MacBooks Pro with the M4 series of processors support. I assume the LCD panel, which is bound to be color-accurate like LG’s other displays, will drastically lower the cost, making it around $2,500, similar to Dell’s uglier but similarly specced offering. LG offered no other specifications or a release date.

I assume this monitor will be a hit since it would be the third 6K, 32-inch monitor on the market, ideal for Mac customers who want perfect Retina scaling. The Pro Display XDR isn’t expected to be refreshed anytime soon, and some people want a larger-than-27-inch option, leaving Dell’s as the only alternative, which is less than optimal due to its design and lack of macOS integration. LG’s UltraFine displays, by comparison, turn on the moment a Mac laptop is connected or a key is pressed, just like an Apple-made display. LG’s latest monitor also looks eerily similar to the Pro Display XDR, leading me to believe it’s intended for the Mac. This is one of the most personally exciting announcements of CES this year.

Sony Honda Mobility’s Afeela

Image: Sony Honda Mobility.

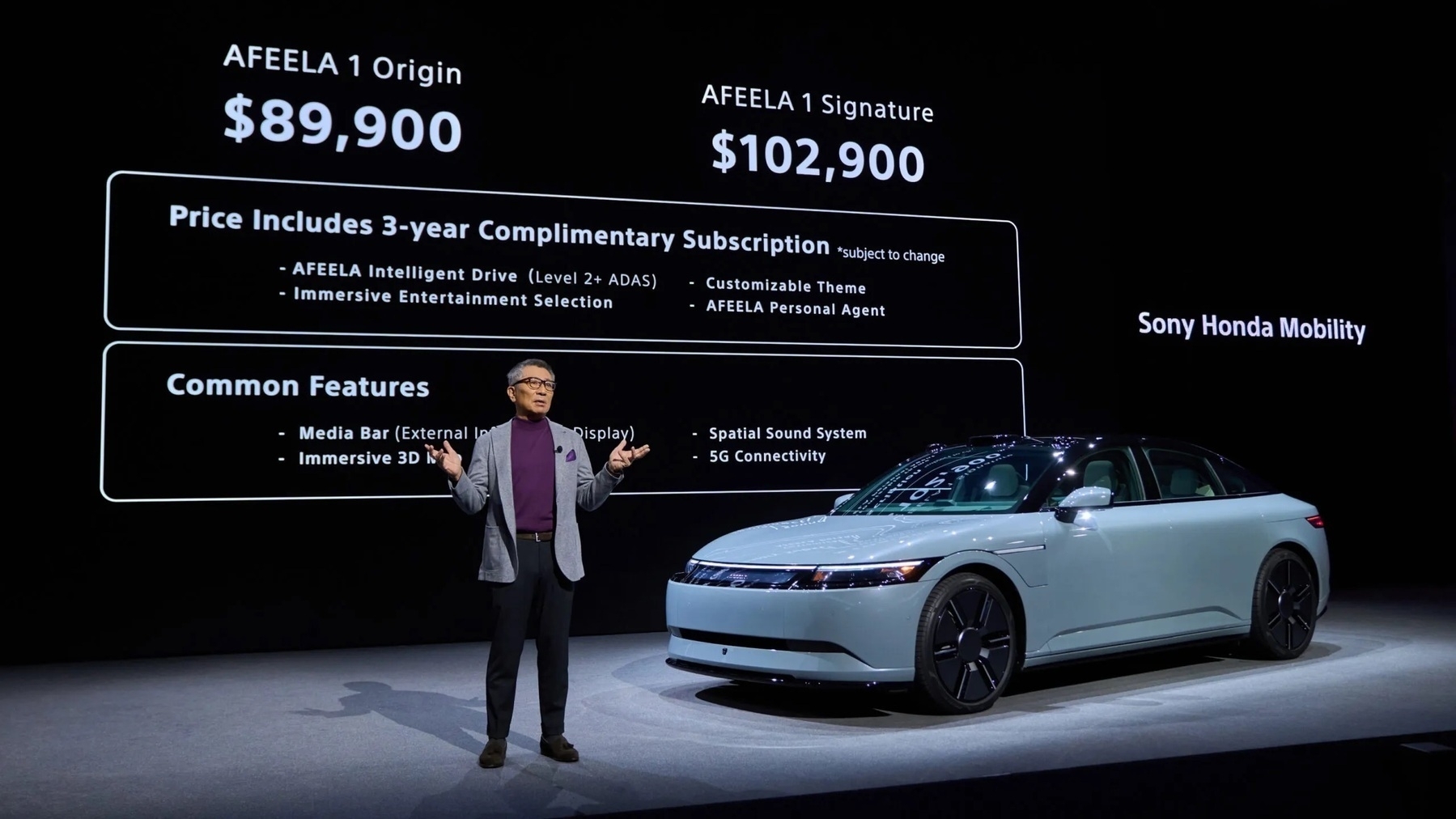

Sony first announced the Afeela electric vehicle in collaboration with Honda at CES 2023 but offered no details on pricing, availability, or specifications for two years while teasing the car’s supposed self-driving functionality and infotainment system. Now, that has changed: the venture announced final pricing for two trims as well as availability for the first units.

On Tuesday, Sony made the Afeela 1 available for reservation. The regular trim is $90,000, and the premium one is $103,000, with three years of self-driving functionality included in the price. (How generous.) Reservations are $200 and fully refundable, but interestingly, they are limited to residents of California, which Sony says is because of the state’s “robust” EV market. The rest of the contiguous United States also has a robust EV market, and the vehicles are assembled in Ohio, which leads me to believe the limit is because Sony can’t produce enough vehicles for the whole country.

But I think that’s the least of the company’s problems. The $103,000 version is the first to ship, with availability scheduled for sometime in 2026; the more affordable $90,000 trim is scheduled for 2027. This realistically means early adopters will opt for the more expensive trim, which is truly very expensive. $100,000 can buy some amazing cars already on the market, and the Afeela has nothing to offer for the price. It is only rated for 300 miles of range, and the company provided no horsepower or acceleration numbers. It’s also unclear if the car has a Tesla charging port for use with the Supercharger network or if it’s stuck with a traditional combined charging system — commonly known as CCS — connector.

Sony provided no timeline for when the vehicle would come to the rest of the United States, which leads me to believe that the entire venture is a pump-and-dump scheme of sorts: sell fewer than 100 vehicles only in California in 2026, cancel the 2027 version, and shut down the project by the end of the decade. That way, Sony and Honda both lose nothing, and nobody buys a car that doesn’t work. The entire deal seems incredibly unscrupulous to me, given that the company is opening two “delivery hubs” in Fremont and Torrance, California, where interested customers will be able to take test drives. The whole thing seems like a proof of concept rather than a full-fledged vehicle.

If I were a betting man, I would say that the Afeela will never become a true competitor in the EV market — ever.

The CES show floor was certainly more exciting than the press conferences from Monday, but there’s still a lot to be uncovered. That’s not a bad thing, or even unexpected, but it’s something to be cautious of when following the news out of CES closely. I still stick to my opinion that this year’s show is one of the most boring in recent years, but that doesn’t mean everything was bad.

AI, GPUs, and TVs: A Diary From CES 2025 Day 1

Maybe CES has hit rock bottom, after all

CES 2025 began Monday in Las Vegas. Image: The Associated Press.

On the first day of the Consumer Electronics Show in Las Vegas, I completed my usual routine: I tuned into the big-name press conferences, took notes, caught up on social media reactions, and repeated until the news ran out and the sun set over the valley. CES hasn’t been about consumer technology as much as it has been about vibes, thoughts, and marketing for a while, but that is the inherent appeal of the show as it stands. In a fragmented, messy media environment, it is hard to get a gist of what the people who make the technology think sticks.

People often correlate marketing with greed: that companies only market products that are the best for them, not us. That is a true but incomplete assertion because marketing executives are not of low intelligence. Spending an enormous amount to advertise congestive heart failure doesn’t make it any more appealing because people generally do not want their heart to fail. That might be a humorous and irrelevant example, but marketing executives and consumers aren’t stupid. The colloquial expression, “You can’t polish a turd,” expresses this succinctly. If something is being marketed heavily, it is almost certain that it is viewed positively amongst the target audience.

CES isn’t about heart failure or marketing strategies; it is about generative artificial intelligence for the second year in a row. The AI boom hasn’t died, and I don’t think it ever will because it’s popular amongst the marketing crowd. It is OK to quibble about the popularity of generative AI — in fact, it’s healthy. But you can’t polish a turd. Money doesn’t grow on trees — if generative AI never stuck, technology’s biggest week wouldn’t be enveloped by it in the way it has been. “Big Tech” firms know better than to waste a free week in front of the media.

As I began looking over my notes, I tried to search for a theme so I could build a lede with it. But it quickly struck me that if a $3,000 supercomputer by a processor company was the most intriguing product I saw at the world’s largest technology trade show, perhaps CES has lost its fastball. These days, CES is emotionally cumbersome to cover because of just how much it has dwindled in recent history. There is an adage in the tech journalism sphere that nothing at CES is real and that it’s all a marketing mirage for the media. But now, the problem is that CES is almost too genuine to the point where a trade show that once was known for surprise and delight turned into a sea of monotony.

Last year, at CES 2024, generative AI was relatively new, and that made it genuinely exciting. It’s correct to contradict the rosiness with a brief reminder that the number of times “AI” was uttered during each keynote was nauseating, but it isn’t like this year was any different. Silicon isn’t exciting anymore, and all the industry decided to offer for 2025 was silicon. Intel, Advanced Micro Devices, Nvidia — they’re all the same, ultimately. I bet any “analyst” reading that last sentence is now suffering from an aneurysm because it’s a gross oversimplification of the entire silicon industry, but it’s true. Silicon suffers from the same stagnation smartphones did four years ago. New neural processing cores and ray tracing have never been the bread and butter of CES.

Similarly, every smart home product felt like beating a dead horse. Matter promised to be a smart home standard that made most accessories platform-agnostic, meaning they could be used with Google Home, Apple’s HomeKit, and Amazon’s Alexa all at once. (It’s not to be confused with Thread, which is a mesh networking connectivity protocol, not a standard.) But with the influx of Matter products in recent history, it isn’t the lack of adoption that bothers me, but reliability. The platform agnosticism was only rolled out about a year ago and still is unreliable, with Jennifer Pattison Tuohy, a smart home reporter for The Verge, calling it “completely broken” in late 2023. Since then, Matter has improved, but variably.

And CES, for better or worse, reliably has more televisions than any other trade show by far. But this year, the main attraction wasn’t new display panels or considerable improvements to picture quality, but Microsoft Copilot in LG and Samsung TVs. Again, it’s hard not to believe the industry is headed in the wrong direction. CES in its prime existed to showcase the gadgets nobody would ever buy, like rollable phones and see-through televisions. But the politics of making maximum profit per dollar spent on constructing fancy exhibitions seems to have watered down the spontaneity that once brought reporters to CES. Marketing executives aren’t stupid, but as the day went by, I kept wishing they were.

Still, I worked through the pain and my misgivings about the show to compile a list of some of my favorite finds from the first day of what I feel will become a grueling three days of press conferences going over incremental product updates. The resulting chronicle is one of incremental updates, somewhat surprising numbers, and a story of marketing and consumerism hiding between the lines.

Intel

Image: Intel.

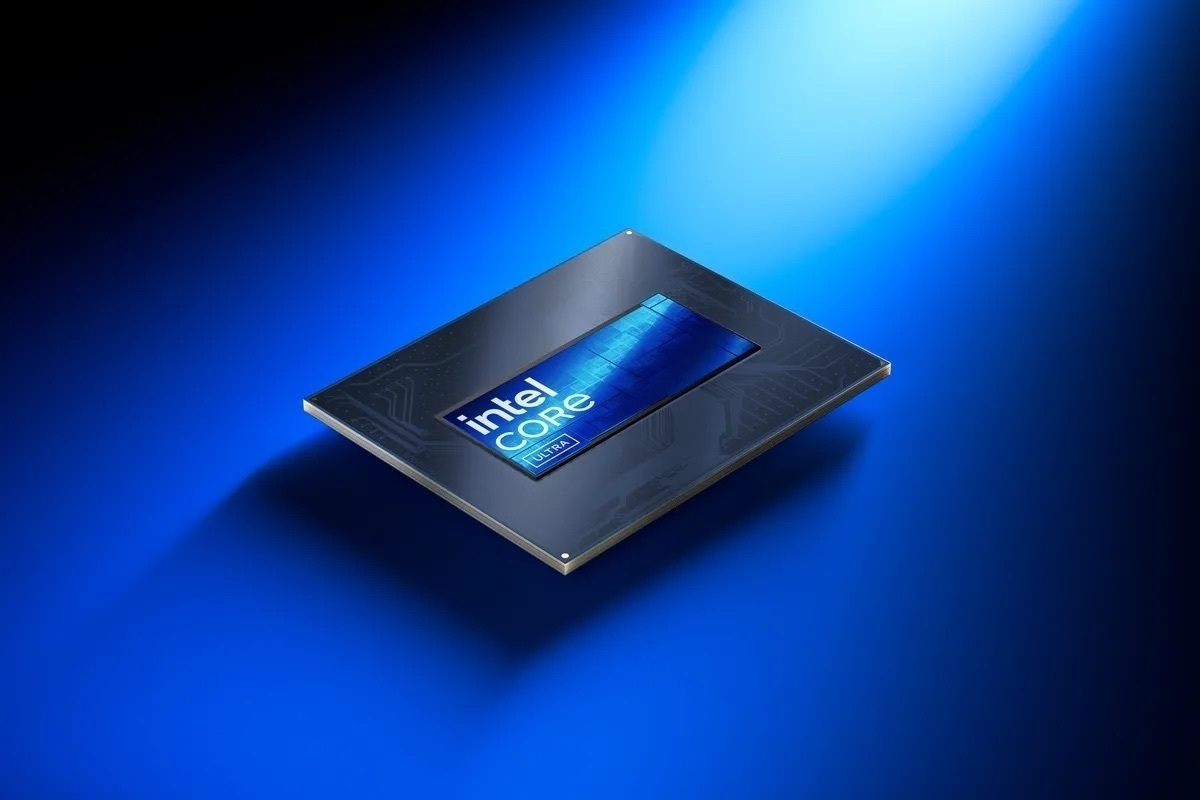

Anyone with even a slight modicum of knowledge about the current state of the silicon industry knows Intel is in hot water. It spun off its foundry business due to dwindling profits, abruptly fired its technically minded chief executive over those dwindling profits, and has been consistently behind in every market for years. Its chief competitor, Advanced Micro Devices, is running laps around it in nearly every important benchmark; Nvidia makes its graphics processing units look like toys; and it lost its most important business partner, Apple, four years ago. Intel, by any objective measurement, is doing awfully, both morale-wise and economically. After its CES 2025 announcements — and the subsequent ones from AMD and Nvidia — its stock price fell to its lowest since the firing of Pat Gelsinger, its prior chief executive.

Yet, the company is still making moves, though perhaps in the wrong direction. On Monday, it announced a line of processors called Arrow Lake, meant to be the successor to its Raptor Lake series, announced at CES last year. The Arrow Lake processors Intel announced Monday are meant for gaming laptops from the likes of Asus, not Copilot+ productivity-oriented PCs. (Lunar Lake, Intel’s bespoke AI chip, will still be used in the latter category for the foreseeable future.)

Intel claims Arrow Lake’s gaming variants offer 5 percent better single-threaded performance and 20 percent better multithreaded performance than its Raptor Lake processors from last CES, and Arrow Lake models will ship with Nvidia’s 50-series graphics cards, adding to the performance increases. Other, non-gaming-focused laptops will use the H-model processors, and Intel claims their single-threaded performance will be up to 15 percent better. Other variants, like the U-series for ultra-low power consumption, were also announced.

The 200HX series, used in gaming laptops, won’t ship in products until late in the first quarter of the year, the company says, while the 200H and 200U chips have already begun production and will be in laptops in just a few weeks.

I say Intel’s announcements are heading in the wrong direction because they don’t follow the pattern of every other hardware maker at CES. If anything, Intel should’ve one-upped its announcements by announcing a successor to Lunar Lake, its AI chip line, to compete with AMD and Nvidia, who packed their announcements chock-full of AI hype mere hours after Intel’s keynote address. That isn’t to say Intel’s presentation was entirely full of duds; the company also announced that Panther Lake, its series of 1.8-nanometer processors using its 18A process, will ship in the second half of 2025. But when Intel is reassuring analysts it’s not leaving the discrete GPU market and advertising a 4 percent increase in the PC market year-over-year, it’s hard to have any confidence in the company. Intel is directionless, and that became even more apparent at CES.

AMD and Dell

Image: AMD.

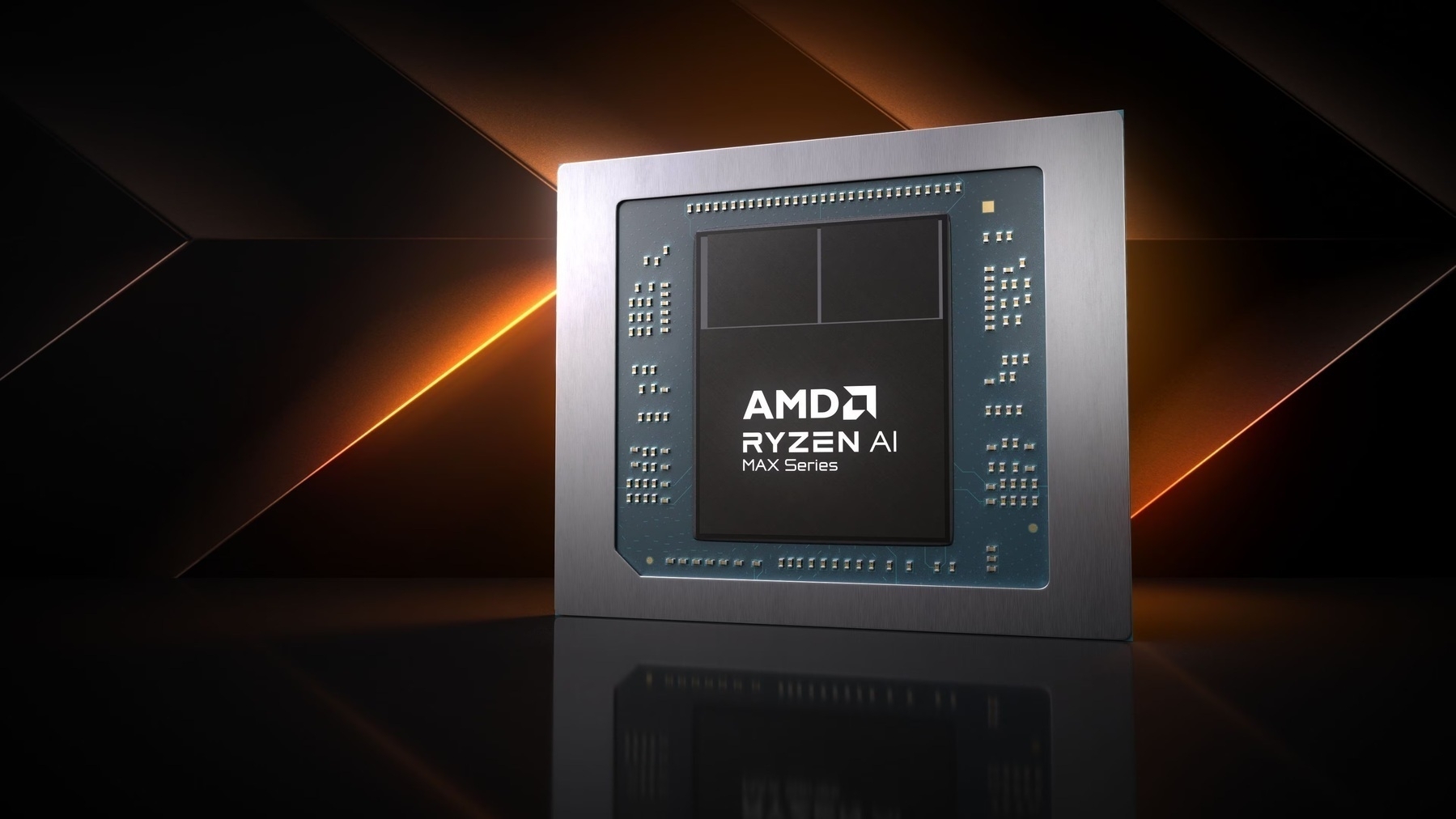

AMD’s keynote, similar to Intel’s, was off. For one, it didn’t bring out Dr. Lisa Su, its charismatic chief executive, to deliver the address. And it didn’t announce Radeon DNA 4, its next-generation GPU platform that powers the Radeon RX 9070, its latest GPU, onstage either, leaving it for a press release. Detractors online believe this is due to Nvidia’s announcements, while others think the lack of interesting announcements was due to Dr. Su’s absence. Instead, the CES presentation focused on its latest flagship processor, mobile chips, and new partnership with Dell.

The company announced the 9950X3D, its highest-end processor with 16 cores on the Zen 5 architecture, its latest. AMD claims it’s “the world’s best processor for gamers and creators,” with an 8 percent performance boost in games over the last-generation 7950X3D and a 15 percent increase in content creation tasks, such as video editing. But perhaps the most ambitious claim is that the processor is 10 percent faster than Intel’s latest, the Core Ultra 285K. These claims are yet to be tested, as the processor — along with its lower-end counterpart, the 12-core 9900X3D — will be available in March, but they seem respectable at first glance.

AMD spent most of its time, however, announcing its new lineup of mobile processors, called the Ryzen AI Max series. Both the Ryzen AI Max and AI Max Plus have AMD’s most powerful graphics, with up to 16 CPU cores (just like the 9950X3D, but in mobile form), 40 RDNA 3.5 compute units, and 256 gigabytes-a-second memory bandwidth. Together, AMD says the AI Max Plus beats Apple’s mid-range M4 Pro processor, announced late last year, yet probably with worse heat management and power consumption. Both Ryzen AI Max chips consume up to 120 watts of power at their peak, but AMD isn’t giving any details on thermal performance, as it most likely varies drastically between laptop models. The processors are Copilot+ PC-compliant and begin shipping in the first quarter of 2025, with the first computers being from Asus and a new HP Copilot+ mini PC, similar to Apple’s Mac mini.

Perhaps AMD’s strangest announcement at its press conference was its new partnership with Dell, a company that historically has always shipped Intel and Nvidia processors in its ever-popular laptops. To accompany the news, Dell announced it would overhaul its naming structure, ditching the XPS, Latitude, and Inspiron for three new variants: Dell, Dell Pro, and Dell Pro Max. The names are a one-to-one rip-off of Apple’s iPhone naming scheme, but it didn’t stop there — in addition to the three variants, each one has three specifications: Base, Premium, and Plus. This results in some extraordinary product names, like Dell Pro Max Plus, Dell Premium, and Dell Pro Base.

Image: Dell.

The internet has been ablaze with comedy for the past day, but seriously, these names are atrocious. Not only could Dell’s product marketing team not ideate a new branding strategy, but it chose to copy Apple’s worst naming scheme and then make it worse. Proponents of the new names say they make more sense than “Dell XPS,” where XPS originally stood for “Extreme Performance System,” but the new names just don’t logically connect. Dell Pro Base is a better product than Dell Premium, for instance. It’s a completely unintuitive, embarrassing system, destroying decades of brand familiarity with one misstep. Truth be told, it embodies the fundamental problem with CES.

Qualcomm

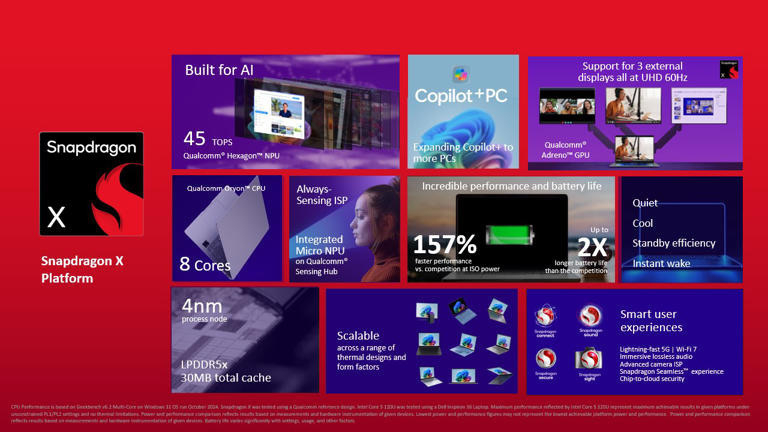

Image: Qualcomm.

Qualcomm, Intel’s biggest foe, launched a new Copilot+-capable processor meant to power cheaper so-called “AI PCs” below $600. The processor, called Snapdragon X, has eight cores and a neural processing unit that performs 45 trillion operations per second, or TOPS. The processor joins the rest of Snapdragon’s Arm-based computer processor lineup; it’s now composed of the Snapdragon X, Snapdragon X Plus, and Snapdragon X Elite. The company says the processor will begin shipping in various devices from HP, Lenovo, Acer, Asus, and Dell in the first half of 2025.

The Snapdragon X will make Copilot+ PCs their cheapest yet, though Windows on Arm is still shaky, with many popular apps broken entirely or running in Compatibility Mode. Still, the chip will shake up the budget laptop business, putting Intel and AMD on their toes to develop cheaper Copilot+-capable processors. Currently, the only Copilot+-capable chips based on the x86 instruction set (the one used by Intel and AMD) are cost-prohibitive flagship parts, which isn’t ideal for schools or corporate buyers.

The processor is built on Taiwan Semiconductor Manufacturing Company’s 4-nm process node, bringing “two times longer battery life than the competition,” according to Qualcomm. I haven’t seen any laptops at CES with the Snapdragon X chip yet, but I assume they’re coming in the next few months.

Samsung

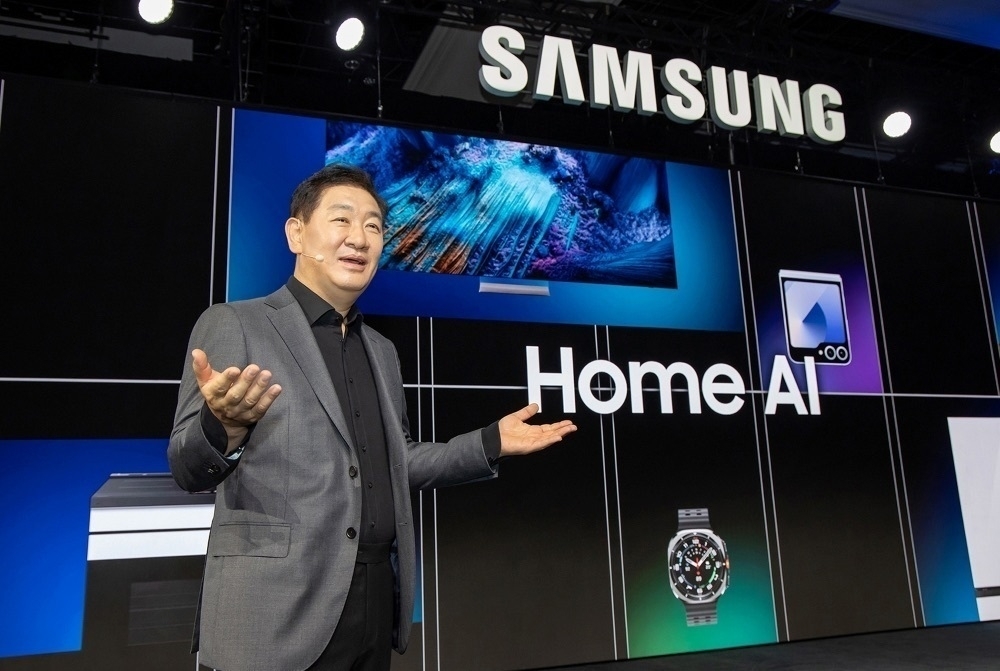

Image: Samsung.

Samsung on Monday re-announced much of what it said last year at CES: AI, AI, AI. The company is bullish on AI in the smart home, emphasizing local AI processing and connectivity between various Samsung products, including SmartThings — its smart home specification — and Galaxy devices. The story is much of the same as last year, but the difference lies in semantics: While last year’s craze was about the technology itself and generative experiences, Samsung this time seems more focused on customer satisfaction, much like Apple. Whether that vision will pan out into reality is to be determined, but it sounds appropriate for the AI skepticism climate the world appears to live in currently.

Samsung calls the initiative “Home AI” — because, of course, everything deserves a brand name — and it evoked a half-futuristic, half-dystopian future of the smart home. For one, Samsung didn’t mention Matter in the AI portion of its presentation. It did eventually, in a separate, more smart home-oriented section of the keynote, but the omission seems to allude to the fact that Matter is flaky and unprepared for generative AI. Many of the things Samsung wants to do require a deep tie-in between hardware and software. For example, one presenter gave a scenario where a Galaxy Watch sensed a person couldn’t fall asleep and automatically set the thermostat to a lower temperature. That’s more than just the smart home: it’s a services tie-in. Dystopian, yet also eerily futuristic.

Samsung also emphasized personalization in its vibes-heavy and announcement-scant conference but put the ideas in terms of AI because CES is a creative writing exercise for the world’s tech marketing professionals. (See the beginning of this article.) Voice recognition and user interface personalization stood out as key objectives of the Home AI initiative — a presenter showcased an instance where a user, with high-contrast mode enabled on their smartphone, spoke to their dryer, which recognized their voice and automatically activated its own high-contrast accessibility settings. Whether that fits the new-age definition of “AI” is debatable, but it’s a perfect example of the Home AI initiative.

In a similar vein, Samsung finally announced a release window for its Ballie AI robot, which for years has promised a personalized AI future in the form of an adorable spherical floor robot with a built-in projector and speakers. Ballie was first demonstrated five years ago at CES 2020, but Samsung updated it at 2023’s show before even releasing the first generation. Now, Ballie is powered by generative AI (because of course it is) but retains much of the same feature set. Think of it as a friendlier, smaller version of Amazon’s Astro, a 2021-era robot that ran Alexa and cost an eye-watering $1,600. Ballie, like Astro, has a camera for home security but runs on SmartThings, allowing users to toggle other parts of their smart home via the robot. Ballie is shipping in the first half of the year, according to Samsung, but the company provided no concrete release date, price, or specifications.

Samsung also announced the successor to the company’s popular The Frame television: The Frame Pro. The Frame, for years, has been regarded as one of the most aesthetically pleasing televisions, not in terms of picture quality, but when it is turned off. The Frame can cycle through art and images and comes in a variety of finishes to complement a space, almost as if it’s an art installation rather than a TV. But The Frame has been plagued by software issues, has mediocre image fidelity (it only has a quantum dot LED panel, whereas most other TVs in its price range have organic-LED displays), and doesn’t get as bright as other LED TVs Samsung sells because of the anti-reflective coating, which helps display art more naturally.

The Frame Pro. Image: Samsung.

The Frame Pro, by contrast, aims to address some of these issues. It now features a nerfed mini-LED display, which provides a boost in contrast and brightness since it splits the display panel into multiple local dimming zones. This way, only one part of the television can receive light while the other parts are completely off. The catch is that The Frame Pro’s display isn’t a true mini-LED panel, where the zones are spread throughout the display. (Every MacBook Pro post-2021 has a mini-LED display; to test it, go to a dark room, open a dark background with a white dot in the center of the screen, and observe the visible blooming behind that dot. That’s mini-LED’s dimming zones in action.) Instead, The Frame Pro has these dimming zones at the bottom of the screen, controlling the brightness vertically instead of in a grid pattern across the display.
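The difference between the two layouts is easier to see with a toy model. The sketch below is my own simplification, not Samsung’s algorithm: it treats a frame as a small grid of brightness values, lights each dimming zone to the level of its brightest pixel, and compares a grid of zones against full-height vertical strips. The zone counts are made up for illustration, since Samsung hasn’t published them.

```python
def zone_backlight(frame, zone_rows, zone_cols):
    """Light each zone to the level of the brightest pixel it covers."""
    height, width = len(frame), len(frame[0])
    tile_h, tile_w = height // zone_rows, width // zone_cols
    levels = [[0] * zone_cols for _ in range(zone_rows)]
    for y in range(height):
        for x in range(width):
            zr = min(y // tile_h, zone_rows - 1)
            zc = min(x // tile_w, zone_cols - 1)
            levels[zr][zc] = max(levels[zr][zc], frame[y][x])
    return levels


def lit_share(levels):
    """Fraction of (equal-sized) zones whose backlight is switched on."""
    zones = [level for row in levels for level in row]
    return sum(1 for level in zones if level > 0) / len(zones)


# A dark 8x8 frame with a single white dot near the center.
frame = [[0] * 8 for _ in range(8)]
frame[4][4] = 255

grid = zone_backlight(frame, 4, 4)    # hypothetical 4x4 grid of zones
strips = zone_backlight(frame, 1, 4)  # hypothetical 4 full-height strips

print(f"Grid zones lit: {lit_share(grid):.4f} of the screen")
print(f"Vertical strips lit: {lit_share(strips):.4f} of the screen")
```

With the same number of columns, the strip layout has to light a full-height band of the screen to show one bright dot, while the grid lights only a small tile around it. That is why I expect more visible blooming from this kind of panel.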

I am sure this will provide some tangible difference knowing how bad the picture quality of the original Frame is in comparison to other high-end televisions, but I don’t think it will fully alleviate the pain of the matte display, which causes considerable color distortion and results in a washed-out picture. The Frame Pro also has a 144-hertz refresh rate, but because of Samsung’s stubborn refusal to support Dolby Vision, it only has HDR10+, Samsung’s proprietary high dynamic range standard. Modern set-top boxes like the Apple TV support it, but content is scarce and not nearly as well-mastered as Dolby Vision. Really, The Frame Pro is still a compromise, and without a price, I’m unsure if the new features make it a better value over the equally compromised Frame.

Samsung’s announcements, while repetitive, were a good breath of fresh air after a packed morning full of processor updates. But none of its new products, unlike some other CES presenters’, has release dates, prices, or even concrete feature concepts. The entire address was one large, lofty, vibes-based presentation. I guess that fits the CES theme.

LG and Microsoft Copilot

Image: LG.

LG began its announcements on Sunday, launching its 2025 television lineup infused with AI. But contrary to tradition, the AI wasn’t image-focused. There were modest improvements to AI Picture Pro and AI Sound Pro, but for the most part, it centered around Microsoft Copilot coming to webOS, with LG even going as far as to reprogram the microphone button to launch the AI assistant. A chatbot is built into the operating system, too, and the remote is now dubbed the “AI Remote.” (It’s worth noting Samsung is also adding Copilot to its TVs, though much less conspicuously.)

LG hasn’t detailed the Copilot integration yet and didn’t even add a screenshot to its press release. All the company has said is that the functionality is coming to the latest version of webOS with the new line of TVs, but with no release date. It’s unclear what Microsoft’s OpenAI-powered chatbot would do, but LG’s own bot would take the lead for most queries, with Copilot being used to look up additional information, says the company. Again, I’m unsure and skeptical about what “information” refers to, but that’s par for the course at CES.

It all circles back around to my lede, nearly 3,500 words ago: CES is an elaborate marketing exercise that sometimes delivers hits and other times duds. But there’s clearly some kind of pent-up demand for such a product, so much so that both Samsung and LG partnered with Microsoft (which hasn’t created anything remotely close to television software in its entire corporate history) to integrate an AI chatbot within webOS and Tizen. It really is unclear what that pent-up demand entails, but what makes this year’s CES so odd is that the companies presenting this year don’t seem to be eager to showcase their latest technology freely. Intel, AMD, and Samsung have all disappointed with their announcements this year.

Either way, color me hesitant to welcome Copilot on my TV anytime soon.

TCL

The TCL 60 XE. Image: Allison Johnson / The Verge.

TCL kept its announcements to a minimum at CES this year, launching a new Android phone called the TCL 60 XE that can switch between a full-color and e-ink-like display with just the flick of a switch at the back of the device. The feature is called Max Ink Mode, and it uses TCL’s Nxtpaper display technology to toggle between the two modes. Nxtpaper isn’t an e-ink display, but it mimics the functionality of e-ink through a standard LCD. The LCD has a reflective layer that eliminates backlight glare and diffuses light, thereby faking the matte, dull e-ink look without rearranging pigment particles using electricity. Because Nxtpaper is just a special LCD, it still operates like a normal screen until the switch is flipped, which changes the appearance of Android.

The TCL 60 XE, otherwise, is a typical Android budget phone, with a 50-megapixel rear camera, 6.8-inch display, and “all-day battery life.” No other specifications were given, but the product is promised to begin shipping in Canada by May and in the United States later this year. (It is exclusive to North America.)

TCL also announced a new projector, called the Playcube, which is an adorable cube-shaped modular device. No other details were provided, however, probably because it is most likely just a concept. But the Nxtpaper 11 Plus, the company’s next-generation tablet, did get more specifications: it features an 11.5-inch display built on Nxtpaper 4.0 and a 120-hertz refresh rate. Nxtpaper 4.0, according to TCL, uses improved diffusion layers to offer better sharpness and brightness. However, TCL issued no pricing information or release date in its press release.

TCL is always a vendor I enjoy hearing from at CES, mostly because it doesn’t have the bandwidth to put on its own extravagant events. While typical for the company, the Max Ink Mode really was intriguing to look at. TCL, however, didn’t introduce its full TV line at CES this year, which is atypical for a company that always seems to offer the largest screens at some of the lowest prices. It did preview a mini-LED one, however, but provided no other specifications or pricing.

Matter and the Smart Home

CES typically brings a plethora of smart home devices, and in recent years, it has become a breeding ground for Matter and Thread appliances. But as I said earlier, Matter continues to be an unreliable standard for most important smart home accessories, with frequent bugs and connectivity issues plaguing the experience. Still, though, this CES has been high on hardware products and less focused on the Matter protocol itself, unlike the last few years. Here are some of the gadgets and announcements I found most intriguing.

Ecobee launched a cheaper smart thermostat to join its lineup of what I think are the best HomeKit-compatible thermostats, alongside the Matter-enabled second-generation Nest Learning Thermostat. The new one, which costs $130, has all the smart features of the premium models but lacks a few bells and whistles, such as the air quality sensor. It can be paired with Ecobee’s SmartSensors, sold separately, but doesn’t support Matter, which Ecobee promised in 2023 to add. (It still supports Google Home, Amazon Alexa, HomeKit, and Samsung SmartThings, so take Matter’s omission with a grain of salt.) I think it’s the best smart thermostat for beginners just getting acquainted with a smart home.

HDMI 2.2 brings 4K resolution at 480 hertz with 96 gigabits per second of bandwidth. The new protocol, developed by the HDMI Forum and called Ultra96 HDMI, also includes a latency indication specification to allow connected devices to communicate with each other and compensate for lag. The HDMI Forum intends for it to mainly be used for audio receivers and says that it performs better than HDMI-CEC, which enables the same cross-device communication in the current HDMI 2.1 specification. HDMI 2.2 cables will begin shipping later this year.
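To put the 96-gigabit figure in perspective, here is a rough back-of-the-envelope sketch of how much raw pixel data 4K at 480 hertz would involve. The 3840×2160 pixel count, the RGB layout, and the bit depths are my assumptions, not details from the announcement, and the math ignores blanking intervals and link-encoding overhead, so treat it as a ballpark rather than a statement about how the specification works.

```python
# Back-of-the-envelope check: raw pixel data rate for 4K at 480 Hz
# versus the 96 Gbps figure quoted for HDMI 2.2.
# Assumptions (not from the announcement): 3840x2160 active pixels, RGB with
# three channels per pixel, and no blanking or link-encoding overhead.

LINK_GBPS = 96  # bandwidth figure quoted in the text


def raw_video_gbps(width, height, refresh_hz, bits_per_channel, channels=3):
    """Return the uncompressed active-pixel data rate in gigabits per second."""
    bits_per_second = width * height * refresh_hz * bits_per_channel * channels
    return bits_per_second / 1e9


for depth in (8, 10, 12):
    rate = raw_video_gbps(3840, 2160, 480, depth)
    verdict = "fits within" if rate <= LINK_GBPS else "exceeds"
    print(f"{depth}-bit: {rate:.1f} Gbps {verdict} {LINK_GBPS} Gbps")
```

Under these assumptions, only 8-bit color squeezes under the limit (about 95.6 Gbps), while 10-bit and 12-bit come out to roughly 119 and 143 Gbps. If that holds, higher color depths at 480 hertz would presumably lean on compression or chroma subsampling.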

Schlage, the renowned door lock maker, announced a new ultra-wideband-powered smart lock with a twist. While some smart locks use Bluetooth Low Energy and near-field communication to communicate — such as Schlage’s own Encode Plus lock, which works with Apple’s home key — Schlage’s latest, the Sense Pro, uses the ultra-wideband chip in certain smartphones to detect when a user is nearing their door lock and automatically unlock it for them. This is possible due to ultra-wideband precision; the technology is used in Apple’s Precision Finding feature, proving its reliability. I don’t think pulling out my phone and holding it against my door is very cumbersome, but this could potentially be useful when my hands are full. The company says the Sense Pro will be available in the spring.

The Schlage Sense Pro. Image: Schlage.

Aqara is launching a 7-inch wall-mounted tablet and home hub combo it calls the Panel Hub S1 in addition to the Touchscreen Dial V1 and Touchscreen Switch S100, three unintuitive names for products that aim to act as souped-up light switches. The devices can be installed in lieu of light switches to control smart home devices connected via a home’s local Thread and Matter networks. This is the promise of Matter: interoperability so that any device can tie into a smart home ecosystem without connecting to one of the big three platforms. Each device features a touchscreen, but the Panel Hub S1 has the largest. It reminds me of Apple’s rumored HomePod with a screen, except perhaps much cheaper. The Dial V1 has a scroll wheel to control devices, and the Touchscreen Switch occupies the space of one switch with a screen for more details. All three products are shipping in the first quarter of the year.

Image: Aqara.

Google announced Gemini is coming to third-party TVs via Google TV, the company’s smart TV software that certain TV manufacturers like Hisense pre-install on their devices. Gemini previously was confined to the Google TV Streamer, Google’s latest set-top box that replaced the Chromecast to much chagrin last year, but now the company is bringing it to all Google TV-enabled televisions. I think this makes more sense than Copilot because Google TV in and of itself is a streaming platform with its own recommendation engine, so Gemini could answer questions about certain items or recommend what to watch.

The Star of the Show: Nvidia

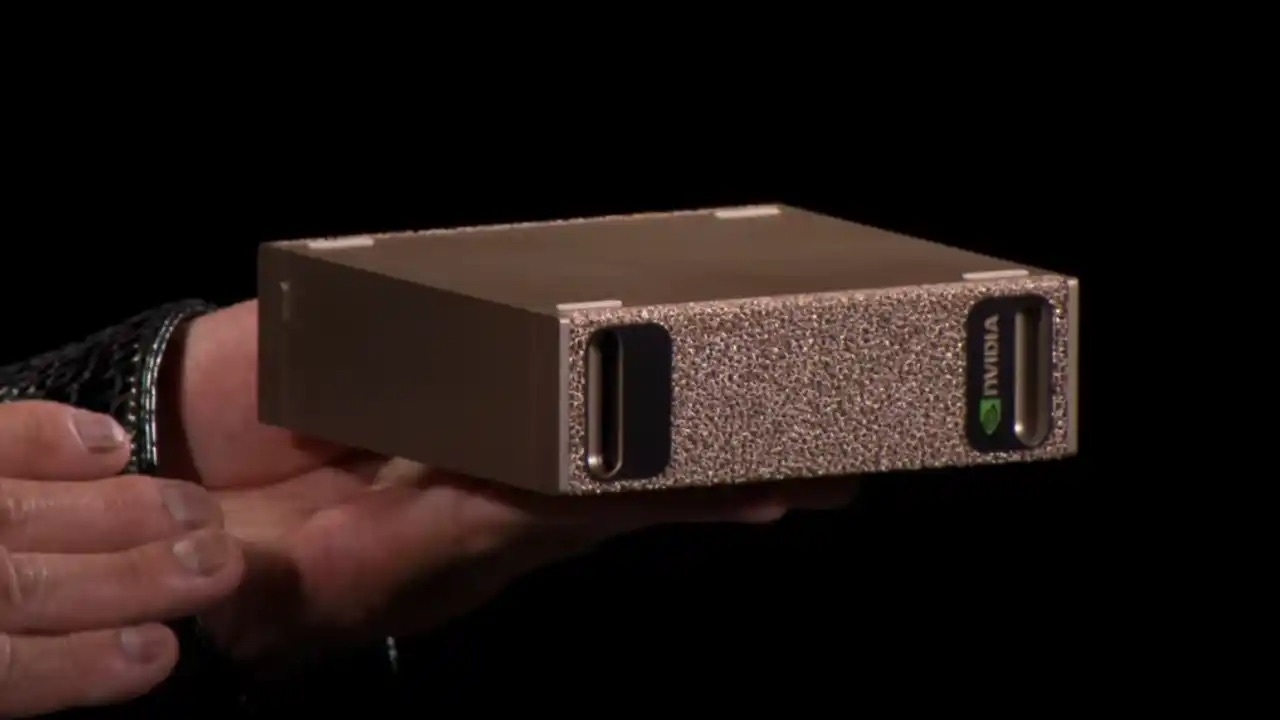

Nvidia’s Project Digits.

Nvidia’s Monday evening presentation was perhaps the most exciting, hotly anticipated event of the day. The keynote attracted attention like I have never seen in recent CES history, with nearly 100,000 people tuning in on the live stream and 14,000 attending in Las Vegas — 2,000 above the capacity limit of the arena. Nvidia, after the launch of ChatGPT and its subsequent competitors, quickly rose to become the most valuable technology company due to its GPUs used for AI training. At CES, the company announced its latest gaming GPU line, the RTX 50-series, as well as other AI-focused processors.

The RTX 50-series GPUs are powered by Nvidia’s Blackwell processor architecture. The new highest-end card, the RTX 5090, can perform up to 4,000 trillion AI operations per second and 380 trillion ray-tracing floating-point operations per second (380 teraflops), and it has 1.8 terabytes per second of memory bandwidth. The company claims the 5090 is two times faster than its predecessor, the RTX 4090, in gaming tasks thanks to so-called tensor cores — components of the card reserved for AI processing — and the next generation of Nvidia’s deep learning super sampling, or DLSS, AI-powered upscaling.

But perhaps the more awe-inspiring part of the keynote was when Jensen Huang, Nvidia’s chief executive, said the RTX 5070 — currently the lowest-end card in the lineup — matches the RTX 4090’s performance in most tasks. For context, the 4090 is currently the most performant graphics processor in the world and takes up an enormous amount of space in a computer case, but if Nvidia is to be believed, the lowest-end, smallest card in its flagship lineup now matches its performance. That’s bananas.

Nvidia announced pricing for the new cards, too: $2,000 for the RTX 5090, $1,000 for the 5080, $750 for the 5070 Ti — a slightly upgraded version of the 5070 — and a mind-boggling $550 for the 5070. The highest-end 4090 from the previous generation cost $1,600, meaning new buyers can save about $1,000 and get an equally performant card. This feat has even made Huang claim that his company’s processors are defying Moore’s Law, the observation that the number of transistors in a processor doubles roughly every two years. I am unsure if such a bold claim is true, but either way, Nvidia’s latest processors are incredible, and Huang mentioned many times during the keynote that it wouldn’t be possible without AI, which now does all the heavy lifting in upscaling.

The company also announced a plethora of large language and video models designed to generate synthetic training data for new, smaller models. The language models are based on Meta’s Llama 3.1 and are called the “Llama Nemotron Language Foundation Models,” and they are fine-tuned for enterprise use and generating training data. Nvidia calls the video model Cosmos, and it says it is the first AI model that “understands the real world,” including textures, light, gravity, and object permanence. (Nvidia Cosmos was trained on 20 million hours of video to achieve this, but I wonder where the video came from.) Both models aim to help Nvidia achieve infinite AI scaling by feeding smaller models data generated by the advanced ones. For instance, Huang said Nvidia Cosmos could simulate “millions of hours on the road” with “just a few thousand miles” to feed a self-driving computer because not every simulation can be created in the real world.

This formed the overarching theme of Nvidia’s presentation: scrape the entirety of human knowledge and use it to generate more. I have always thought of this strategy as AI inbreeding, as crude as that may sound. If the quality of training data is poor, the output also will be, and the vicious cycle continues until the result is nonsensical. Each pass through a model adds distortion — it’s like children playing a game of telephone. Huang says that this is the reason AI has no wall, but only time will tell whether he and his company should be believed. And while Nvidia Cosmos and the Nemotron LLMs are available for public use — and open-source on GitHub — they are aimed at enterprise customers to run on Nvidia processors to develop their own models.
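The telephone-game worry can be illustrated with a toy simulation. This is only a sketch under my own assumptions: the "model" here is just a bell curve refit to samples drawn from the previous generation's bell curve, and the function name, sample counts, and starting distribution are all hypothetical. It says nothing about how Nvidia actually trains Cosmos or its language models.

```python
import random
import statistics


def telephone_game(generations=10, samples_per_generation=50, seed=0):
    """Refit a normal distribution to its own samples, generation after generation.

    Returns a list of (mean, stdev) pairs, starting with the "real" data source.
    """
    rng = random.Random(seed)
    mean, stdev = 0.0, 1.0  # hypothetical "real world" distribution
    history = [(mean, stdev)]
    for _ in range(generations):
        # Each generation sees only data produced by the previous model.
        data = [rng.gauss(mean, stdev) for _ in range(samples_per_generation)]
        mean, stdev = statistics.fmean(data), statistics.stdev(data)
        history.append((mean, stdev))
    return history


for generation, (mean, stdev) in enumerate(telephone_game()):
    print(f"generation {generation}: mean={mean:+.3f} stdev={stdev:.3f}")
```

Because every generation estimates its parameters from a finite sample of the last one, small errors are inherited rather than corrected, so the numbers tend to wander away from the original over time. Real training pipelines filter and mix in fresh data, which is presumably why Huang is more optimistic than I am.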

To create these models, Nvidia needed a lot of compute power, so it built a new supercomputer architecture called Grace Blackwell, powered by “the most powerful chip in the world,” according to Nvidia. The processor, which has 130 trillion transistors, is not intended for purchase, but Nvidia scaled down the Grace Blackwell architecture to a Mac mini-sized $3,000 supercomputer available for consumers. The supercomputer, called Project Digits, is the “world’s smallest AI supercomputer,” according to Nvidia, and is capable of running 200-billion-parameter models. The computer is powered by the GB10 Superchip and features 128 GB of unified memory, 20 efficiency cores, and up to 4 TB of storage, together achieving one petaflop of performance.

The announcement of Project Digits and Grace Blackwell was probably the most exciting part of Monday at CES. The promise of a personal supercomputer has always been elusive, and this time, it genuinely appears as if it will be available soon. Nvidia says Project Digits will be available for purchase in May, and the RTX 50-series in the first half of 2025.

The first day of CES is always packed, but this year’s conference felt off. Much of it felt like a rehashing of last year’s show. Perhaps that’s just me, but the vibes are underwhelming.

About Meta’s Outrageous Apple DMA Interoperability Requests

Don’t pretend this is about choice

Foo Yun Chee, reporting for Reuters:

Apple on Wednesday hit out at Meta Platforms, saying its numerous requests to access the iPhone maker’s software tools for its devices could impact users’ privacy and security, underscoring the intense rivalry between the two tech giants.

Under the European Union’s landmark Digital Markets Act that took effect last year, Apple must allow rivals and app developers to inter-operate with its own services or risk a fine of as much as 10% of its global annual turnover.

Meta has made 15 interoperability requests thus far, more than any other company, for potentially far-reaching access to Apple’s technology stack, the latter said in a report.

“In many cases, Meta is seeking to alter functionality in a way that raises concerns about the privacy and security of users, and that appears to be completely unrelated to the actual use of Meta external devices, such as Meta smart glasses and Meta Quests,” Apple said.

Meta hasn’t released these interoperability requests itself, leaving the onus on Apple to truthfully represent Meta’s interests, but Andrew Bosworth, Meta’s chief technology officer, alluded to what they might be about on Threads:

If you paid for an iPhone you should be annoyed that Apple won’t give you the power to decide what accessories you use with it! You paid a lot of money for that computer and it could be doing so much more for you but they handicap it to preference their own accessories (which are not always the best!). All we are asking for is the opportunity for consumers to choose how best to use their own devices.

It’s obvious that Meta wants its iOS apps to interact with Meta Quests and glasses (“accessories”) better and more intuitively. But let’s look at the list of features Meta asked for through interoperability requests, as written in Apple’s white paper titled “It’s getting personal”1 as a response to the European Commission, the European Union’s executive agency:

- AirPlay

- App Intents

- Apple Notification Center Service, which is used to allow connected Bluetooth Low Energy devices to receive and display notifications from a user’s iPhone

- CarPlay

- “Connectivity to all of a user’s Apple devices”

- Continuity Camera

- “Devices connected with Bluetooth”

- iPhone Mirroring

- “Messaging”

- “Wi-Fi networks and properties”

Apple puts the list quite bluntly in the white paper:

If Apple were to have to grant all of these requests, Facebook, Instagram, and WhatsApp could enable Meta to read on a user’s device all of their messages and emails, see every phone call they make or receive, track every app that they use, scan all of their photos, look at their files and calendar events, log all of their passwords, and more. This is data that Apple itself has chosen not to access in order to provide the strongest possible protection to users.

Third-party developers can accomplish most of what they want from these iOS features with the application programming interfaces Apple already provides. They can use AirPlay to cast content from their apps to nearby supported televisions, use App Intents to power widgets and shortcuts, use ANCS to display notifications from a user’s iPhone on a connected device, make apps for CarPlay, use Continuity Camera in their own Mac apps, view devices connected via Bluetooth, send messages with embedding logging using the UIActivityViewController API, and view details of nearby Wi-Fi networks. All of this is already available within iOS with ample developer and design documentation.

For instance, if Meta wanted to create an easy way to set up a new pair of Meta Ray-Ban glasses, it could use the new-in-iOS-18 API called AccessorySetupKit, demonstrated at this year’s Worldwide Developers Conference, to display a native sheet with quick access to Bluetooth, near-field communication, and Wi-Fi. There’s no need to get access to a user’s connected Bluetooth devices or Wi-Fi networks — it’s all done with one privacy-preserving API. As Apple puts it in its developer documentation:

Use the AccessorySetupKit framework to simplify discovery and configuration of Bluetooth or Wi-Fi accessories. This allows the person using your app to use these devices without granting overly-broad Bluetooth or Wi-Fi access.

From this Apple-presented feature interoperability list, I can’t think of much Meta would want that isn’t already available. The only features I can reasonably understand are iPhone Mirroring and Continuity Camera, but those are Apple features made for Apple products. Meta could absolutely build a Continuity Camera-like app that beamed a low-latency video feed from a connected iPhone to a Meta Quest headset, as Camo did for Apple Vision Pro. That’s a third-party app made with the APIs Apple provides today, and it works flawlessly. Similarly, a third-party iPhone Mirroring app called Bezel on visionOS and macOS works like a charm and has for years before Apple natively supported controlling an iPhone via a Mac. These apps aren’t new and work using Apple’s existing APIs.

Meta’s interoperability requests are designed as power grabs, much like the DMA is for the European Commission. At first, it’s confusing to laypeople why Meta and Apple feud so often, but the answer isn’t so complicated: Meta (née Facebook) missed the mobile revolution when it happened in 2009, was caught flat-footed when social media blew up on the smartphone, and suddenly found itself making most of its money on another company’s platform. Mark Zuckerberg, Meta’s founder, isn’t one to play anything but a home game, so instead of working with Apple, he actively worked against it for the last decade. Facebook changed its name to Meta in 2021 to emphasize its “metaverse” project — now an artifact of the past replaced by artificial intelligence — because it didn’t want to play on another company’s turf anymore.